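1 Introduction

The conjugate gradient method is a method for solving the large-scale smooth unconstrained optimization problem

where

or is obtained by the strong Wolfe line search

where the parameters

Under the strong Wolfe line search conditions (

Clearly,

Clearly,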

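2 Algorithm description

Based on formulas (1.9) and (1.10), the algorithmic framework of this paper is established as follows.

Initialization. Choose an arbitrary initial point

Step 1 If

Step 2 Generate the step length by the strong Wolfe line search rule

Step 3 Set

Step 4 Set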
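The steps above follow the usual nonlinear conjugate gradient loop, which can be sketched in Python as below. This is only a minimal sketch under stated assumptions: the parameter formulas (1.9) and (1.10) are not reproduced here, so the classical PRP+ rule stands in as a placeholder `beta_rule`; `strong_wolfe` is a simple bracket-and-bisect search, not the line search used in the experiments; the default values of `delta`, `sigma` and `eps`, the steepest-descent restart, and all function names are illustrative choices.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def strong_wolfe(f, grad, x, d, delta=1e-4, sigma=0.1, alpha=1.0, max_iter=60):
    """Bracket-and-bisect search for a step satisfying the strong Wolfe conditions:
    f(x + a d) <= f(x) + delta * a * g^T d   and   |g(x + a d)^T d| <= sigma * |g^T d|."""
    f0, dg0 = f(x), dot(grad(x), d)   # dg0 < 0 is assumed (descent direction)
    lo, hi = 0.0, math.inf
    for _ in range(max_iter):
        xt = [xi + alpha * di for xi, di in zip(x, d)]
        dgt = dot(grad(xt), d)
        if f(xt) > f0 + delta * alpha * dg0:
            hi = alpha                # sufficient decrease fails: step too long
        elif abs(dgt) <= sigma * abs(dg0):
            return alpha              # both conditions hold
        elif dgt < 0:
            lo = alpha                # slope still too negative: step too short
        else:
            hi = alpha                # slope positive and too large: step too long
        alpha = 2.0 * alpha if hi == math.inf else 0.5 * (lo + hi)
    return alpha                      # fallback: last trial step, conditions not verified

def beta_prp_plus(g_new, g_old, d_old):
    """Classical PRP+ parameter; a placeholder, not formula (1.9) or (1.10)."""
    y = [a - b for a, b in zip(g_new, g_old)]
    return max(0.0, dot(g_new, y) / dot(g_old, g_old))

def cg_minimize(f, grad, x0, beta_rule, eps=1e-6, max_iter=1000):
    x, g = list(x0), grad(x0)
    d = [-gi for gi in g]                               # first direction: steepest descent
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= eps:                 # stopping test on the gradient norm
            return x, k
        alpha = strong_wolfe(f, grad, x, d)             # step length
        x = [xi + alpha * di for xi, di in zip(x, d)]   # new iterate
        g_new = grad(x)
        beta = beta_rule(g_new, g, d)
        d = [-gn + beta * di for gn, di in zip(g_new, d)]
        if dot(g_new, d) >= 0:                          # safeguard added for this sketch only
            d = [-gn for gn in g_new]
        g = g_new
    return x, max_iter

# Usage on a small convex quadratic (illustrative test function).
f = lambda x: 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)
grad = lambda x: [x[0], 10.0 * x[1]]
x_star, iters = cg_minimize(f, grad, [1.0, 1.0], beta_prp_plus)
print(x_star, iters)
```

Passing the parameter rule as a function keeps the loop unchanged when a different formula is substituted for the placeholder.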

For ease of analysis, the IPRP method and the IHS method denote the methods given by the parameter formulas

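3 Properties and global convergence of the IPRP method

To establish the convergence of the algorithm, we first state the two standard assumptions it requires.

(H1) The function

(H2) The function

The following lemma gives the well-known Zoutendijk condition [15], which plays a very important role in the global convergence analysis of conjugate gradient methods.

Lemma 3.1 Suppose that assumptions (H1) and (H2) hold, and consider the general iterative method (1.1)–(1.2). If the search direction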
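For reference, the Zoutendijk condition is commonly stated in the following form. This is the standard statement from the literature, given here as an assumption about what Lemma 3.1 asserts; $g_k$ is taken to be the gradient at the $k$-th iterate and $d_k$ the search direction.

$$\sum_{k=0}^{\infty} \frac{\left(g_k^{T} d_k\right)^{2}}{\|d_k\|^{2}} < +\infty .$$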

For the unconstrained optimization problem, if there exists a constant

then the search direction is said to
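The sufficient descent property is usually written as $g_k^{T} d_k \le -c\,\|g_k\|^{2}$ for some constant $c > 0$. Assuming that this is the form of condition (3.1), a direction can be checked numerically with the small Python sketch below; the function names and the sample vectors are illustrative.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def is_sufficient_descent(g, d, c):
    """Return True if g^T d <= -c * ||g||^2 for the given constant c > 0."""
    return dot(g, d) <= -c * dot(g, g)

g = [3.0, -4.0]
d_steepest = [-gi for gi in g]       # steepest descent gives g^T d = -||g||^2
d_orthogonal = [4.0, 3.0]            # orthogonal to g, so g^T d = 0

print(is_sufficient_descent(g, d_steepest, 1.0))
print(is_sufficient_descent(g, d_orthogonal, 0.1))
```

The steepest descent direction satisfies the inequality with $c = 1$, while a direction orthogonal to the gradient fails it for every $c > 0$.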

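Next, we analyze the global convergence of the IPRP algorithm. To this end, we first prove that the search directions generated by the IPRP algorithm are all sufficient descent directions under the strong Wolfe line search rule.

Lemma 3.2 Suppose that assumption (H2) holds and that the direction

Proof When

Using (1.2) and (1.9), we immediately obtain

By the second inequality of the strong Wolfe line search (1.4) and the induction hypothesis, we have

Substituting (3.5) into (3.4) gives

Furthermore, for (3.6), using

Therefore, relation (3.2) holds for all

By Lemma 3.2, when

Lemma 3.3 Suppose that assumptions (H1) and (H2) hold. For any

Proof Combining the strong Wolfe line search with (1.9) and (3.3), and then using

Let

This completes the proof.

Based on Lemmas 3.1, 3.2 and 3.3, the global convergence theorem for the IPRP method can be established.

Theorem 3.1 Suppose that assumptions (H1) and (H2) hold and that the sequence of iterates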

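4 Properties and global convergence of the IHS method

We next show that the search directions of the IHS method satisfy the sufficient descent condition (3.1) under the strong Wolfe line search.

Lemma 4.1 Suppose that assumption (H2) holds and that the search direction

Furthermore, the relation

Proof We argue by mathematical induction. When

Assume that for

In addition, it follows readily from the induction hypothesis that

Furthermore, using (3.3) and (4.2), we obtain

Dividing both sides of (4.3) by

Finally, the global convergence theorem for the IHS method can be established.

Theorem 4.1 Suppose that assumptions (H1) and (H2) hold and that the sequence of iterates

Proof We argue by contradiction. If the theorem does not hold, then, noting that

Dividing both sides of (4.4) by

Noting that

This implies that

This contradicts the Zoutendijk condition of Lemma 3.1. The proof is complete.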

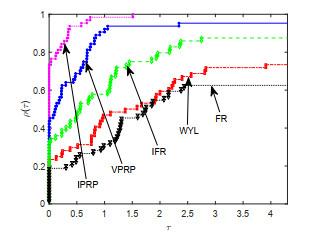

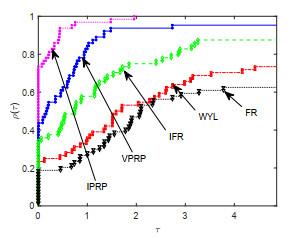

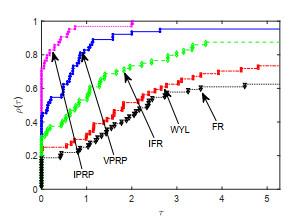

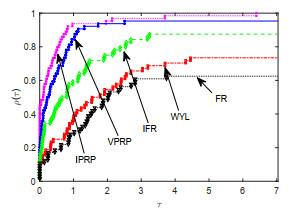

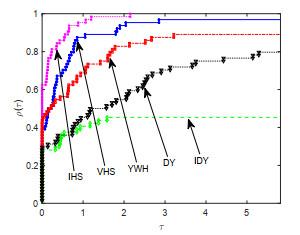

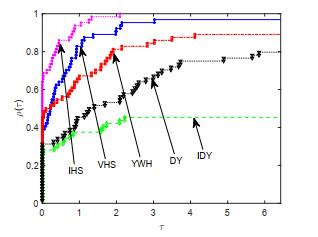

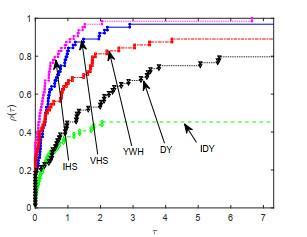

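5 Numerical experiments

To examine the practical numerical performance of the proposed IPRP and IHS methods, each method was tested on 64 problems, all taken from the unconstrained optimization test collections [16-17], with dimensions ranging from 2 to 50000. To make the comparison easier, two groups of experiments were carried out: the first group consists of the proposed IPRP algorithm together with FR [2], WYL [6], VPRP [9] and IFR [13]; the second group consists of the proposed IHS algorithm together with DY [5], YWH [8], VHS [9] and IDY [13]. The tests were run in MATLAB R2017b under Windows 10 on a machine with an Intel(R) Core(TM) i5-8250U CPU at 1.80 GHz and 8 GB of RAM. All tests used the strong Wolfe line search rule (1.4) to obtain the step length, with the parameters chosen as

In the experiments, four key indicators were recorded and compared for each algorithm: the number of iterations (Itr), the number of objective function evaluations (NF), the number of gradient evaluations (NG), and the computing time in seconds (Tcpu). The 2-norm of the gradient of the objective function at termination (

Table 1 Numerical results for the first group of methods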

| No. | Problem (name / dimension) | IPRP (Itr/NF/NG/Tcpu/‖g_k‖) | VPRP (Itr/NF/NG/Tcpu/‖g_k‖) | WYL (Itr/NF/NG/Tcpu/‖g_k‖) | IFR (Itr/NF/NG/Tcpu/‖g_k‖) | FR (Itr/NF/NG/Tcpu/‖g_k‖) |
| --- | --- | --- | --- | --- | --- | --- |

| 1 | bdexp 10 | 3/1/3/0.005/2.27e-48 | 3/1/3/0.001/6.25e-49 | 3/1/3/0.000/6.25e-49 | 3/1/3/0.000/1.06e-48 | F/F/F/F/F |

| 2 | bdexp 100 | 3/2/3/0.000/1.33e-82 | 3/2/3/0.000/1.24e-82 | 3/2/3/0.000/1.24e-82 | 3/2/3/0.000/1.23e-82 | 4/4/4/0.001/8.22e-10 |

| 3 | bdexp 1000 | 3/2/3/0.001/4.45e-107 | 3/2/3/0.001/4.45e-107 | 3/2/3/0.002/4.45e-107 | 3/2/3/0.001/4.41e-107 | 3/2/3/0.001/3.25e-65 |

| 4 | bdexp 10000 | 3/2/3/0.009/1.14e-109 | 3/2/3/0.009/1.14e-109 | 3/2/3/0.009/1.14e-109 | 3/2/3/0.009/1.13e-109 | 3/2/3/0.007/5.74e-104 |

| 5 | bdexp 20000 | 3/2/3/0.019/1.07e-109 | 3/2/3/0.019/1.07e-109 | 3/2/3/0.018/1.07e-109 | 3/2/3/0.019/1.07e-109 | 3/2/3/0.022/8.65e-107 |

| 6 | exdenschnb 6 | 18/333/142/0.027/5.87e-06 | 21/424/199/0.031/2.28e-06 | F/F/F/F/F | 92/2469/1261/0.120/7.46e-06 | F/F/F/F/F |

| 7 | exdenschnb 8 | 18/333/142/0.016/6.77e-06 | 21/424/198/0.020/2.63e-06 | F/F/F/F/F | 81/2181/1132/0.103/9.46e-06 | F/F/F/F/F |

| 8 | himmelbg 200 | 3/6/7/0.001/7.14e-29 | 3/6/7/0.001/7.12e-29 | 3/6/7/0.001/7.12e-29 | 3/6/7/0.001/7.13e-29 | 3/6/7/0.001/2.78e-27 |

| 9 | himmelbg 1000 | 3/6/7/0.002/1.60e-28 | 3/6/7/0.001/1.59e-28 | 3/6/7/0.001/1.59e-28 | 3/6/7/0.001/1.59e-28 | 3/6/7/0.001/6.23e-27 |

| 10 | himmelbg 2000 | 3/6/7/0.002/2.26e-28 | 3/6/7/0.002/2.25e-28 | 3/6/7/0.002/2.25e-28 | 3/6/7/0.002/2.26e-28 | 3/6/7/0.002/8.81e-27 |

| 11 | himmelbg 5000 | 3/6/7/0.006/3.57e-28 | 3/6/7/0.004/3.56e-28 | 3/6/7/0.004/3.56e-28 | 3/6/7/0.005/3.57e-28 | 3/6/7/0.003/1.39e-26 |

| 12 | genquartic 1000 | 26/527/272/0.051/8.90e-06 | 49/1193/591/0.087/1.72e-06 | F/F/F/F/F | 135/3820/1894/0.262/7.72e-06 | F/F/F/F/F |

| 13 | genquartic 2000 | 24/465/195/0.041/3.68e-07 | 40/829/402/0.071/3.32e-07 | F/F/F/F/F | 63/1527/759/0.152/4.42e-06 | 80/1963/985/0.166/8.99e-06 |

| 14 | genquartic 3000 | 28/533/256/0.074/9.56e-07 | 39/819/394/0.110/4.38e-06 | 130/3558/1746/0.438/2.90e-06 | 109/2817/1389/0.343/3.04e-06 | 60/1436/699/0.198/1.29e-06 |

| 15 | biggsb1 5 | 47/1099/508/0.060/3.03e-06 | 51/1260/580/0.058/3.30e-06 | 83/2167/1067/0.096/9.24e-06 | 101/2486/1235/0.114/3.20e-06 | 116/3006/1485/0.151/1.63e-06 |

| 16 | biggsb1 10 | 72/1771/868/0.097/3.99e-06 | 110/3065/1354/0.176/6.47e-06 | 321/9146/4502/0.464/6.68e-06 | 165/4546/2275/0.249/7.11e-06 | 260/7383/3699/0.394/7.49e-06 |

| 17 | sinquad 3 | 211/6309/2454/0.327/5.45e-06 | 141/3934/1661/0.167/8.82e-07 | F/F/F/F/F | 209/5635/2820/0.292/1.67e-06 | F/F/F/F/F |

| 18 | fletcbv3 10 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 |

| 19 | fletcbv3 20 | 123/2561/1320/0.158/5.54e-06 | 207/3854/1963/0.230/9.67e-06 | 144/3777/1938/0.208/9.27e-06 | 144/3549/1786/0.173/9.18e-06 | 235/6092/3110/0.287/8.70e-06 |

| 20 | nonscomp 50 | 69/1550/742/0.090/5.32e-06 | 350/10319/4581/0.511/8.98e-06 | 332/9490/4661/0.474/8.39e-06 | F/F/F/F/F | F/F/F/F/F |

| 21 | dixmaana 1500 | 15/159/64/0.217/9.53e-07 | 16/263/115/0.249/2.16e-06 | 56/1370/656/1.247/7.33e-06 | 54/1387/654/1.258/6.30e-06 | 63/1542/738/1.411/8.05e-06 |

| 22 | dixmaanb 1500 | 11/140/50/0.145/2.91e-06 | 11/140/50/0.120/2.90e-06 | 65/1613/757/1.566/6.91e-06 | 50/1278/623/1.139/5.80e-06 | F/F/F/F/F |

| 23 | dixmaanc 1500 | 60/1667/793/1.578/7.39e-06 | 21/430/199/0.406/3.25e-06 | F/F/F/F/F | 141/4104/2008/3.680/1.69e-06 | 49/1260/611/1.156/8.49e-06 |

| 24 | dixmaand 1500 | 63/1757/855/1.670/6.73e-06 | F/F/F/F/F | 59/1419/655/1.251/8.17e-06 | 75/1811/888/1.692/2.60e-06 | 54/1303/644/1.186/8.75e-06 |

| 25 | dixon3dq 20 | 521/14809/6503/0.737/4.61e-06 | 403/11099/5010/0.493/9.85e-06 | F/F/F/F/F | 411/11043/5496/0.490/8.31e-06 | F/F/F/F/F |

| 26 | dqdrtic 1000 | 89/2094/957/0.135/7.15e-06 | 123/3254/1469/0.195/7.20e-06 | 337/8603/4223/0.506/8.01e-06 | 145/3860/1933/0.236/7.33e-06 | F/F/F/F/F |

| 27 | dqdrtic 3000 | 115/2569/1149/0.318/2.11e-06 | 130/3633/1604/0.394/8.77e-06 | F/F/F/F/F | 267/7418/3623/0.882/4.49e-06 | 348/9565/4759/1.167/5.93e-06 |

| 28 | dqrtic 50 | 17/226/94/0.019/2.87e-06 | 23/340/152/0.025/9.97e-07 | 30/571/257/0.039/3.97e-06 | 37/718/334/0.047/3.17e-06 | 42/847/422/0.058/3.39e-06 |

| 29 | dqrtic 100 | 23/292/117/0.024/9.22e-07 | 37/740/350/0.059/4.05e-06 | 39/834/412/0.069/4.43e-06 | F/F/F/F/F | 56/1250/607/0.100/2.99e-06 |

| 30 | dqrtic 150 | 23/345/158/0.035/5.20e-06 | 27/473/221/0.047/7.25e-06 | 41/890/411/0.087/7.32e-06 | F/F/F/F/F | 55/1239/607/0.124/9.64e-06 |

| 31 | edensch 100 | 38/805/372/0.075/6.94e-06 | 37/747/358/0.066/4.94e-06 | F/F/F/F/F | F/F/F/F/F | F/F/F/F/F |

| 32 | edensch 200 | 39/837/402/0.099/7.72e-06 | 41/894/409/0.103/3.57e-06 | 586/17937/8632/2.164/6.27e-06 | 90/2221/1066/0.257/2.92e-06 | 203/5577/2766/0.698/6.38e-06 |

| 33 | edensch 1000 | 53/1251/618/0.559/8.40e-06 | 57/1412/678/0.604/6.60e-06 | 212/6028/2941/2.500/9.89e-06 | F/F/F/F/F | 121/3187/1554/1.336/9.97e-06 |

| 34 | fletchcr 10 | 92/2330/1063/0.122/4.66e-06 | 137/3824/1720/0.198/5.62e-06 | 181/4817/2375/0.239/9.63e-06 | 130/3342/1649/0.161/8.36e-06 | F/F/F/F/F |

| 35 | fletchcr 100 | 63/1570/739/0.072/4.99e-06 | 126/3389/1561/0.153/6.47e-06 | 200/5116/2552/0.272/2.95e-06 | 136/3723/1839/0.194/8.70e-06 | F/F/F/F/F |

| 36 | liarwhd 10 | 85/2053/931/0.126/4.38e-06 | 118/3128/1461/0.153/6.45e-06 | 67/1637/822/0.079/7.80e-06 | 143/3622/1726/0.174/1.86e-06 | F/F/F/F/F |

| 37 | liarwhd 10 | 85/2053/931/0.102/4.38e-06 | 118/3128/1461/0.152/6.45e-06 | 67/1637/822/0.079/7.80e-06 | 143/3622/1726/0.175/1.86e-06 | F/F/F/F/F |

| 38 | liarwhd 20 | 54/1201/548/0.059/2.51e-06 | 83/2005/946/0.096/3.67e-06 | F/F/F/F/F | 112/2678/1341/0.132/6.28e-06 | F/F/F/F/F |

| 39 | penalty1 1000 | 14/243/93/0.755/2.34e-06 | 14/243/93/0.753/2.34e-06 | 20/440/186/1.402/8.67e-07 | 14/243/93/0.702/2.34e-06 | F/F/F/F/F |

| 40 | penalty1 2000 | 10/129/39/1.282/5.53e-06 | 10/129/39/1.295/5.53e-06 | 19/421/183/4.409/1.67e-07 | 13/220/81/2.262/7.43e-07 | 14/233/82/2.331/1.04e-06 |

| 41 | power1 30 | 390/11133/4828/0.479/7.51e-06 | F/F/F/F/F | F/F/F/F/F | 554/14994/7484/0.661/7.38e-06 | F/F/F/F/F |

| 42 | power1 50 | 974/27796/12081/1.271/7.98e-06 | F/F/F/F/F | F/F/F/F/F | 844/22960/11507/1.063/7.28e-06 | F/F/F/F/F |

| 43 | quartc 20 | 17/218/97/0.015/1.93e-06 | 25/436/214/0.024/3.39e-06 | 32/624/296/0.034/5.70e-06 | 21/369/172/0.022/9.11e-06 | 21/348/170/0.023/5.01e-06 |

| 44 | quartc 100 | 23/292/117/0.034/9.22e-07 | 37/740/350/0.075/4.05e-06 | 39/834/412/0.068/4.43e-06 | F/F/F/F/F | 56/1250/607/0.099/2.99e-06 |

| 45 | tridia 5 | 90/2208/1021/0.131/7.47e-06 | 91/2347/1098/0.111/5.04e-06 | 233/6288/3116/0.288/9.52e-06 | 132/3694/1822/0.167/9.45e-06 | 178/4808/2347/0.224/8.08e-06 |

| 46 | raydan2 1000 | 11/173/64/0.025/6.93e-06 | 11/173/59/0.015/6.97e-06 | 13/235/98/0.019/5.88e-06 | 11/173/64/0.015/6.93e-06 | F/F/F/F/F |

| 47 | raydan2 5000 | 15/270/119/0.068/8.63e-07 | 12/204/71/0.052/8.98e-06 | F/F/F/F/F | 13/235/81/0.071/7.52e-06 | F/F/F/F/F |

| 48 | raydan2 9000 | 13/235/89/0.119/3.57e-06 | 14/236/80/0.122/8.17e-07 | F/F/F/F/F | 12/204/73/0.105/9.68e-06 | F/F/F/F/F |

| 49 | diagonal1 12 | 105/3134/1528/0.152/6.47e-06 | 105/3134/1528/0.138/6.46e-06 | 178/5045/2412/0.254/8.59e-06 | 123/3267/1606/0.175/2.63e-06 | 154/4290/2074/0.188/9.47e-06 |

| 50 | diagonal2 20 | 110/3291/1615/0.167/7.74e-07 | 112/3352/1629/0.152/1.21e-06 | 177/4865/2381/0.234/8.10e-06 | 91/2436/1199/0.109/7.14e-06 | 158/4381/2169/0.195/6.73e-06 |

| 51 | diagonal2 100 | 86/2332/1156/0.125/9.74e-06 | 138/3993/1881/0.226/5.03e-06 | 258/7007/3440/0.367/5.18e-06 | 142/3889/1927/0.228/4.61e-06 | 314/8540/4265/0.457/3.56e-06 |

| 52 | diagonal3 20 | 94/2642/1291/0.153/4.23e-06 | 92/2518/1239/0.173/6.97e-06 | 198/5426/2744/0.254/7.35e-06 | 136/3671/1808/0.169/8.58e-06 | 297/8635/4233/0.389/8.19e-06 |

| 53 | diagonal3 40 | 78/1848/864/0.088/8.04e-06 | 70/1662/798/0.082/2.75e-06 | 330/9184/4561/0.436/8.35e-06 | F/F/F/F/F | 166/4212/2153/0.221/7.77e-06 |

| 54 | bv 1000 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 |

| 55 | bv 10000 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 |

| 56 | ie 50 | 12/193/77/0.255/6.88e-06 | 11/166/63/0.178/4.87e-06 | 42/1006/516/1.155/2.93e-06 | 30/598/294/0.676/7.83e-06 | 49/1249/599/1.258/9.32e-06 |

| 57 | ie 200 | 14/225/98/3.642/3.11e-06 | 11/166/64/2.631/9.62e-06 | 58/1475/739/24.086/9.66e-06 | 42/961/446/15.469/6.32e-06 | 37/834/424/13.787/4.12e-06 |

| 58 | gauss 3 | 8/142/65/0.018/3.85e-06 | 8/142/64/0.014/3.78e-06 | 35/912/446/0.082/2.70e-06 | 5/51/16/0.004/2.43e-06 | 27/699/363/0.055/3.86e-06 |

| 59 | kowosb 4 | 403/11443/5023/0.678/5.35e-06 | 501/13830/6067/0.789/6.93e-06 | F/F/F/F/F | 241/6791/3346/0.387/8.22e-06 | F/F/F/F/F |

| 60 | lin 500 | 2/2/2/0.021/9.93e-14 | 2/2/2/0.018/9.93e-14 | 2/2/2/0.020/9.93e-14 | 2/2/2/0.016/9.93e-14 | 2/2/2/0.016/9.93e-14 |

| 61 | rosex 50 | 454/13379/5609/0.780/9.65e-06 | 518/14946/6508/1.015/8.36e-06 | F/F/F/F/F | F/F/F/F/F | F/F/F/F/F |

| 62 | trid 20 | 101/2542/1164/0.206/4.07e-06 | 111/2785/1293/0.253/4.46e-06 | 717/20092/10002/1.691/9.73e-06 | 173/4652/2343/0.360/9.95e-06 | 449/12935/6383/1.034/9.91e-06 |

| 63 | vardim 5 | 10/137/43/0.011/5.41e-07 | 10/137/43/0.008/5.41e-07 | 13/228/96/0.013/9.27e-07 | 10/137/43/0.008/5.41e-07 | 14/228/88/0.017/5.31e-07 |

| 64 | watson 4 | 106/2780/1261/0.345/9.27e-06 | 190/5428/2430/0.585/9.92e-06 | F/F/F/F/F | 138/3688/1822/0.368/9.18e-06 | F/F/F/F/F |

Table 2 Numerical results for the second group of methods

| No. | Problem (name / dimension) | IHS (Itr/NF/NG/Tcpu/‖g_k‖) | VHS (Itr/NF/NG/Tcpu/‖g_k‖) | YWH (Itr/NF/NG/Tcpu/‖g_k‖) | IDY (Itr/NF/NG/Tcpu/‖g_k‖) | DY (Itr/NF/NG/Tcpu/‖g_k‖) |
| --- | --- | --- | --- | --- | --- | --- |

| 1 | bdexp 10 | 3/1/3/0.001/2.25e-48 | 3/1/3/0.001/5.63e-49 | 3/1/3/0.001/5.63e-49 | 3/1/3/0.001/9.95e-49 | 3/1/3/0.000/8.27e-54 |

| 2 | bdexp 100 | 3/2/3/0.001/1.33e-82 | 3/2/3/0.001/1.24e-82 | 3/2/3/0.001/1.24e-82 | 3/2/3/0.001/1.22e-82 | 3/2/3/0.001/2.02e-83 |

| 3 | bdexp 1000 | 3/2/3/0.002/4.45e-107 | 3/2/3/0.001/4.45e-107 | 3/2/3/0.001/4.45e-107 | 3/2/3/0.001/4.40e-107 | 3/2/3/0.001/3.49e-107 |

| 4 | bdexp 10000 | 3/2/3/0.012/1.14e-109 | 3/2/3/0.009/1.14e-109 | 3/2/3/0.006/1.14e-109 | 3/2/3/0.005/1.13e-109 | 3/2/3/0.005/1.11e-109 |

| 5 | bdexp 20000 | 3/2/3/0.015/1.07e-109 | 3/2/3/0.017/1.07e-109 | 3/2/3/0.019/1.07e-109 | 3/2/3/0.020/1.07e-109 | 3/2/3/0.018/1.06e-109 |

| 6 | exdenschnb 6 | 27/575/270/0.031/6.48e-07 | 46/1205/572/0.060/5.91e-06 | 85/2160/1080/0.102/9.61e-06 | F/F/F/F/F | 45/1082/500/0.049/2.80e-06 |

| 7 | exdenschnb 8 | 27/575/270/0.035/7.48e-07 | 46/1205/581/0.070/6.83e-06 | 82/2086/1040/0.099/9.94e-06 | F/F/F/F/F | 45/1082/521/0.050/3.23e-06 |

| 8 | himmelbg 200 | 3/6/7/0.001/7.14e-29 | 3/6/7/0.001/7.12e-29 | 3/6/7/0.001/7.12e-29 | 3/6/7/0.001/7.13e-29 | 3/6/7/0.001/6.93e-29 |

| 9 | himmelbg 1000 | 3/6/7/0.001/1.60e-28 | 3/6/7/0.001/1.59e-28 | 3/6/7/0.001/1.59e-28 | 3/6/7/0.001/1.59e-28 | 3/6/7/0.001/1.55e-28 |

| 10 | himmelbg 2000 | 3/6/7/0.001/2.26e-28 | 3/6/7/0.001/2.25e-28 | 3/6/7/0.001/2.25e-28 | 3/6/7/0.001/2.25e-28 | 3/6/7/0.001/2.19e-28 |

| 11 | himmelbg 5000 | 3/6/7/0.003/3.57e-28 | 3/6/7/0.003/3.56e-28 | 3/6/7/0.003/3.56e-28 | 3/6/7/0.004/3.57e-28 | 3/6/7/0.004/3.47e-28 |

| 12 | genquartic 200 | 21/337/151/0.021/2.29e-06 | 38/872/413/0.058/5.45e-06 | 92/2398/1194/0.124/6.62e-06 | 63/1571/737/0.087/3.97e-06 | 821/19378/9592/1.049/9.80e-06 |

| 13 | genquartic 500 | 30/599/289/0.033/5.23e-06 | 36/712/351/0.047/4.93e-06 | 65/1501/760/0.112/1.08e-06 | 78/1989/962/0.114/9.02e-06 | 645/17776/8774/1.104/9.29e-06 |

| 14 | genquartic 1000 | 26/497/224/0.034/2.70e-06 | 32/582/258/0.038/4.41e-06 | 149/4040/1993/0.282/6.24e-06 | F/F/F/F/F | 918/25177/12373/1.652/9.83e-06 |

| 15 | biggsb1 10 | 92/2354/1072/0.137/8.90e-06 | 99/2590/1194/0.130/4.88e-06 | 93/2353/1157/0.118/6.10e-06 | F/F/F/F/F | 300/7576/3703/0.363/9.64e-06 |

| 16 | biggsb1 20 | 223/6267/2797/0.328/4.70e-06 | 231/6441/2952/0.310/9.38e-06 | 141/3413/1740/0.158/9.96e-06 | F/F/F/F/F | 585/15571/7647/0.817/6.94e-06 |

| 17 | fletcbv3 10 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 | 1/1/1/0.000/5.97e-06 |

| 18 | nonscomp 50 | 71/1796/850/0.118/6.23e-06 | 49/988/455/0.047/4.92e-06 | F/F/F/F/F | F/F/F/F/F | F/F/F/F/F |

| 19 | dixmaana 1500 | 15/177/73/0.187/8.55e-06 | 13/184/61/0.156/5.51e-06 | F/F/F/F/F | 18/267/110/0.237/2.08e-06 | 25/423/187/0.394/2.57e-06 |

| 20 | dixmaanb 1500 | 11/140/44/0.147/2.91e-06 | 11/140/53/0.128/2.90e-06 | 18/316/132/0.297/9.49e-06 | 10/117/35/0.097/7.97e-06 | 13/172/69/0.155/5.31e-07 |

| 21 | dixmaanc 1500 | 20/324/142/0.330/1.63e-06 | 24/400/169/0.369/4.89e-07 | F/F/F/F/F | 29/579/278/0.526/2.10e-06 | F/F/F/F/F |

| 22 | dixmaand 1500 | 20/303/137/0.316/9.11e-06 | 18/297/129/0.272/7.43e-06 | 59/1312/656/1.246/7.34e-06 | F/F/F/F/F | F/F/F/F/F |

| 23 | dixmaanf 1500 | 384/10668/4805/9.600/6.61e-06 | 328/8716/3884/7.877/6.86e-06 | 368/9331/4633/8.518/9.40e-06 | F/F/F/F/F | 569/13296/6208/12.037/9.45e-06 |

| 24 | dixmaang 1500 | 462/12937/5721/11.405/6.91e-06 | 349/9644/4160/8.895/9.67e-06 | 251/6303/3170/5.904/7.43e-06 | F/F/F/F/F | F/F/F/F/F |

| 25 | dixmaanh 1500 | 340/8992/4020/8.074/9.24e-06 | 443/12355/5798/11.331/6.07e-06 | 253/6592/3315/6.061/9.63e-06 | F/F/F/F/F | 545/12688/6055/11.547/9.72e-06 |

| 26 | dqdrtic 500 | 112/2969/1315/0.169/4.71e-06 | 808/24158/10585/1.257/6.11e-06 | 234/5935/2961/0.308/8.73e-06 | F/F/F/F/F | 332/7838/3899/0.402/8.00e-06 |

| 27 | dqdrtic 1000 | 448/12853/5663/0.829/6.15e-06 | 677/20184/8818/1.191/7.38e-06 | 195/5120/2524/0.303/6.75e-06 | F/F/F/F/F | 356/9315/4555/0.572/9.28e-06 |

| 28 | dqdrtic 5000 | 211/5452/2496/0.871/9.23e-06 | 786/23433/10290/3.794/7.65e-06 | 329/9479/4559/1.520/7.36e-06 | F/F/F/F/F | 355/9103/4407/1.442/9.96e-06 |

| 29 | edensch 200 | 40/923/459/0.120/3.76e-06 | 37/773/344/0.090/3.17e-06 | 99/2586/1330/0.303/2.67e-06 | F/F/F/F/F | F/F/F/F/F |

| 30 | edensch 500 | 48/1121/538/0.261/5.01e-06 | 48/1121/507/0.250/9.24e-06 | F/F/F/F/F | F/F/F/F/F | 646/14842/7011/3.463/9.95e-06 |

| 31 | fletchcr 20 | 96/2510/1184/0.133/3.12e-06 | 122/3213/1517/0.168/4.57e-06 | 100/2485/1271/0.143/5.84e-06 | F/F/F/F/F | 720/19851/9712/0.967/9.81e-06 |

| 32 | fletchcr 50 | 108/2811/1292/0.143/9.45e-06 | 111/2937/1382/0.131/8.33e-06 | 70/1702/811/0.104/1.88e-06 | F/F/F/F/F | 353/9180/4634/0.447/8.43e-06 |

| 33 | genrose 40000 | 394/11155/4844/14.998/1.15e-06 | 484/13780/6083/18.593/9.07e-06 | 617/16168/8104/22.158/6.56e-06 | F/F/F/F/F | F/F/F/F/F |

| 34 | genrose 50000 | 239/6311/2783/10.298/6.13e-06 | 307/8594/3772/13.857/3.30e-06 | 726/18954/9375/33.155/4.74e-06 | F/F/F/F/F | F/F/F/F/F |

| 35 | liarwhd 10 | 75/1884/846/0.104/7.13e-06 | 92/2380/1068/0.121/9.74e-06 | 81/1932/948/0.098/1.58e-06 | F/F/F/F/F | 169/4333/2143/0.236/7.07e-06 |

| 36 | liarwhd 20 | 91/2350/1048/0.123/1.68e-06 | 147/3927/1783/0.204/4.36e-06 | 71/1791/870/0.101/1.15e-06 | F/F/F/F/F | 158/3925/1941/0.244/2.74e-06 |

| 37 | penalty1 1000 | 14/243/93/0.791/2.34e-06 | 14/243/93/0.758/2.34e-06 | 19/406/179/1.333/9.37e-07 | 19/406/177/1.367/9.37e-07 | 14/243/93/0.750/2.34e-06 |

| 38 | penalty1 2000 | 10/129/39/1.354/5.53e-06 | 10/129/39/1.301/5.53e-06 | 18/385/157/4.370/3.61e-08 | 18/385/157/4.268/3.61e-08 | 10/129/39/1.302/5.53e-06 |

| 39 | penalty1 5000 | 11/152/50/11.744/3.01e-06 | 11/152/50/11.720/3.01e-06 | 10/156/50/12.004/4.03e-06 | 10/156/50/11.701/4.03e-06 | 11/152/51/11.610/3.01e-06 |

| 40 | quartc 20 | 12/131/50/0.012/1.48e-06 | 15/193/77/0.013/6.78e-07 | 25/465/214/0.027/7.65e-06 | 27/554/243/0.031/4.83e-06 | 42/907/438/0.049/8.77e-06 |

| 41 | quartc 100 | 21/268/112/0.034/2.55e-06 | 22/298/107/0.042/5.43e-06 | F/F/F/F/F | 40/835/412/0.077/7.91e-06 | F/F/F/F/F |

| 42 | tridia 10 | 93/2351/1061/0.138/6.02e-06 | 160/4450/1973/0.215/5.81e-06 | 143/3824/1885/0.193/6.63e-06 | F/F/F/F/F | 509/13202/6489/0.659/6.52e-06 |

| 43 | tridia 30 | 243/6517/2942/0.339/7.01e-06 | 244/6428/2942/0.362/8.75e-06 | 229/6007/2989/0.310/9.35e-06 | F/F/F/F/F | F/F/F/F/F |

| 44 | raydan1 50 | 54/1229/560/0.067/4.85e-06 | 65/1361/679/0.071/1.69e-06 | 91/2358/1158/0.114/5.16e-06 | F/F/F/F/F | 685/15740/7582/0.776/9.90e-06 |

| 45 | raydan1 80 | 69/1547/712/0.072/8.33e-06 | 98/2546/1222/0.123/8.71e-06 | 107/2819/1366/0.139/2.74e-06 | F/F/F/F/F | 602/13450/6380/0.789/9.59e-06 |

| 46 | raydan2 1000 | 11/173/64/0.018/6.93e-06 | 11/173/66/0.014/6.95e-06 | 11/173/65/0.014/6.93e-06 | 11/173/65/0.014/6.95e-06 | 11/173/65/0.027/6.95e-06 |

| 47 | raydan2 4000 | 12/204/79/0.055/9.01e-06 | 13/235/109/0.062/4.97e-06 | 15/268/96/0.063/4.95e-07 | 16/301/98/0.067/1.75e-06 | 15/271/92/0.079/7.57e-07 |

| 48 | raydan2 10000 | 13/208/79/0.135/6.37e-09 | 14/236/90/0.139/2.02e-06 | 14/238/92/0.155/5.95e-07 | 15/272/115/0.157/1.62e-06 | 12/204/72/0.126/8.83e-06 |

| 49 | diagonal1 50 | 156/4632/2239/0.252/5.98e-06 | 168/5006/2447/0.314/9.21e-06 | 106/2632/1279/0.134/5.76e-06 | F/F/F/F/F | F/F/F/F/F |

| 50 | diagonal2 200 | 168/4488/2112/0.294/6.79e-06 | 232/6717/3155/0.423/5.53e-06 | 156/3915/1904/0.264/3.42e-06 | F/F/F/F/F | 901/23693/11745/1.642/9.54e-06 |

| 51 | diagonal2 800 | 468/13834/6665/1.469/9.51e-06 | 460/13514/6589/1.395/4.10e-06 | 297/8009/3930/0.846/7.15e-06 | F/F/F/F/F | F/F/F/F/F |

| 52 | diagonal3 5 | 82/2438/1160/0.146/4.57e-06 | 82/2438/1151/0.130/4.58e-06 | 90/2387/1194/0.115/7.49e-06 | 74/1924/927/0.106/6.19e-06 | 322/8250/4083/0.460/9.78e-06 |

| 53 | diagonal3 20 | 107/3017/1401/0.150/8.29e-06 | 117/3398/1613/0.174/7.60e-06 | 91/2344/1142/0.131/8.12e-06 | F/F/F/F/F | 542/13718/6653/0.701/9.80e-06 |

| 54 | bv 1000 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 | 1/1/1/0.000/4.99e-06 |

| 55 | bv 10000 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 | 1/1/1/0.000/5.00e-08 |

| 56 | ie 100 | 12/193/85/0.894/9.38e-06 | 11/166/75/0.729/2.85e-06 | 70/1873/917/8.274/4.81e-06 | F/F/F/F/F | 71/1838/904/7.827/9.92e-06 |

| 57 | ie 200 | 14/225/89/3.598/2.89e-06 | 11/166/67/2.651/4.02e-06 | 102/2876/1443/48.512/3.88e-06 | 15/207/104/3.495/2.82e-06 | 62/1551/711/25.058/5.32e-06 |

| 58 | singx 10 | 174/4588/2004/0.307/2.52e-06 | 618/18316/7284/1.186/8.99e-06 | 334/8361/4166/0.620/7.59e-06 | F/F/F/F/F | 731/19522/9716/1.219/9.55e-06 |

| 59 | singx 150 | 175/4802/2117/0.758/6.53e-06 | 720/21252/8241/3.513/9.69e-06 | 601/15530/7775/2.721/3.25e-06 | F/F/F/F/F | 228/5911/2866/1.009/4.27e-06 |

| 60 | beale 2 | 134/3736/1642/0.201/7.28e-06 | 203/5839/2717/0.305/3.43e-06 | F/F/F/F/F | F/F/F/F/F | F/F/F/F/F |

| 61 | froth 2 | 933/26993/11996/1.634/9.76e-06 | F/F/F/F/F | F/F/F/F/F | F/F/F/F/F | 210/6330/3026/0.396/9.06e-06 |

| 62 | lin 100 | 2/2/2/0.007/3.13e-14 | 2/2/2/0.004/3.13e-14 | 2/2/2/0.003/3.13e-14 | 2/2/2/0.002/3.13e-14 | 2/2/2/0.002/3.13e-14 |

| 63 | lin 500 | 2/2/2/0.027/9.93e-14 | 2/2/2/0.019/9.93e-14 | 2/2/2/0.019/9.93e-14 | 2/2/2/0.016/9.93e-14 | 2/2/2/0.024/9.93e-14 |

| 64 | trid 120 | 963/28247/12176/6.211/6.81e-06 | F/F/F/F/F | 461/11845/5899/2.515/6.45e-06 | F/F/F/F/F | F/F/F/F/F |
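Tables of this kind are often summarized with the performance profiles of Dolan and Moré, whose paper appears in the reference list. The Python sketch below shows how such a profile could be computed from one indicator. It is only an illustration under assumptions: the entry `F` is taken to mean a failed run and is encoded as `math.inf`, the function name `performance_profile` is hypothetical, and the sample data are the iteration counts (Itr) of IPRP, VPRP and WYL for three rows of Table 1 (exdenschnb 6, genquartic 1000, biggsb1 5).

```python
import math

def performance_profile(costs, taus):
    """costs[solver] is a list of per-problem costs; math.inf marks a failure.
    Returns, for each solver, the fraction of problems whose cost is within
    a factor tau of the best cost on that problem, for every tau in taus."""
    solvers = list(costs)
    n_prob = len(costs[solvers[0]])
    best = [min(costs[s][p] for s in solvers) for p in range(n_prob)]
    profile = {}
    for s in solvers:
        ratios = [
            costs[s][p] / best[p] if best[p] < math.inf else math.inf
            for p in range(n_prob)
        ]
        profile[s] = [sum(r <= tau for r in ratios) / n_prob for tau in taus]
    return profile

F = math.inf  # assumed meaning of the table entry "F"
itr = {
    "IPRP": [18, 26, 47],
    "VPRP": [21, 49, 51],
    "WYL": [F, F, 83],
}
profile = performance_profile(itr, taus=[1.0, 1.5, 2.0])
for name, values in profile.items():
    print(name, values)
```

The sketch divides by the best cost on each problem, so it suits Itr, NF and NG, which are at least 1; the Tcpu column contains entries of 0.000 and would need a positive floor before being used this way.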

Figure 1

Figure 2

Figure 3

Figure 4

Figure 5

Figure 6

Figure 7

Figure 8

References

Methods of conjugate gradients for solving linear systems. DOI:10.6028/jres.049.044

Function minimization by conjugate gradients. DOI:10.1093/comjnl/7.2.149

Note sur la convergence de méthodes de directions conjuguées

The conjugate gradient method in extreme problems. DOI:10.1016/0041-5553(69)90035-4

A nonlinear conjugate gradient method with a strong global convergence property. DOI:10.1137/S1052623497318992

The convergence properties of some new conjugate gradient methods

The proof of the sufficient descent condition of the Wei-Yao-Liu conjugate gradient method under the strong Wolfe-Powell line search

A note about WYL's conjugate gradient method and its applications

An improved Wei-Yao-Liu nonlinear conjugate gradient method for optimization computation

A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search

A class of modified non-monotonic spectral conjugate gradient method and applications to non-negative matrix factorization (in Chinese). DOI:10.3969/j.issn.1003-3998.2018.05.012

Two conjugate gradient methods with sufficient descent property (in Chinese)

Improved Fletcher-Reeves and Dai-Yuan conjugate gradient methods with the strong Wolfe line search

Global convergence properties of conjugate gradient methods for optimization

Testing unconstrained optimization software. DOI:10.1145/355934.355936

CUTE: constrained and unconstrained testing environment. DOI:10.1145/200979.201043

Benchmarking optimization software with performance profiles. DOI:10.1007/s101070100263