Interpretation of report on cardiovascular health and diseases in China 2022

1

2023

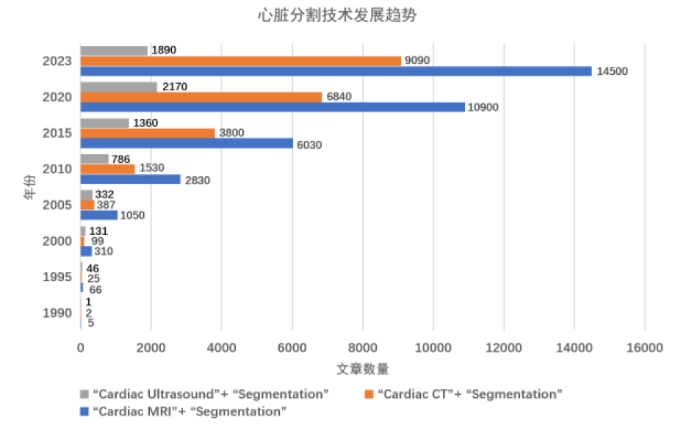

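... According to the Interpretation of Report on Cardiovascular Health and Diseases in China 2022 [1], about 330 million people in China currently live with cardiovascular disease (CVD). As the population ages, the impact of CVD in China is becoming increasingly prominent, and its high mortality and heavy economic burden have drawn the attention of many researchers. The heart consists mainly of the left ventricle (LV), right ventricle (RV), left atrium (LA), and right atrium (RA), all enclosed by myocardium. Using medical imaging to examine cardiac structure and function is routine in clinical diagnosis. The imaging techniques in common use are X-ray computed tomography (CT), cardiac magnetic resonance imaging (CMRI), and echocardiography (ultrasonic cardiogram, UCG). CT reconstructs tomographic images by computer from X-ray projection data; it is fast and offers high spatial resolution. In addition, CT angiography (CTA) combines contrast-enhanced CT with thin-slice, wide-coverage, fast scanning to show vascular detail clearly throughout the body. However, X-rays expose the body to ionizing radiation, which makes CT unsuitable for CVD patients in poor physical condition. CMRI is based on the principle of nuclear magnetic resonance; with different gradient magnetic fields it can image in any orientation, and it provides high soft-tissue contrast. In particular, short-axis cine CMRI shows high contrast between the blood inside the ventricles and the myocardium and can dynamically display changes in the ventricles, and even the atria, across the phases of the cardiac cycle, so it is widely used for cardiac segmentation and functional analysis. For example, segmenting the ventricular myocardium makes it possible to compute the change in blood volume across phases, and hence the ejection fraction and filling curve, and further to track myocardial motion and analyze changes in myocardial stress; segmenting the atria makes it possible to compute atrial volume at each phase in order to assess atrial load and diagnose atrial fibrillation. UCG images the heart in real time and captures details of cardiac motion, which helps in examining abnormal cardiac function and in guiding cardiac surgery and interventional procedures. ...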
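The link between ventricular segmentation and ejection fraction mentioned in this passage can be illustrated with a small sketch. It assumes that the blood-pool volume is approximated by summing labelled pixels over a stack of short-axis slices, and that the ejection fraction is computed as (EDV - ESV) / EDV; the function names, mask values, pixel area, and slice thickness below are illustrative assumptions, not values from the cited work.

```python
def cavity_volume_ml(mask_slices, pixel_area_mm2, slice_thickness_mm):
    """Blood-pool volume in mL from a stack of binary short-axis masks."""
    # Count labelled pixels over every row of every slice.
    n_pixels = sum(sum(row) for mask in mask_slices for row in mask)
    # pixels * mm^2 * mm gives mm^3; 1 mL = 1000 mm^3.
    return n_pixels * pixel_area_mm2 * slice_thickness_mm / 1000.0


def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction in percent: (EDV - ESV) / EDV * 100."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv_ml - esv_ml) / edv_ml


# Toy masks: 1 marks a blood-pool pixel (values are illustrative only).
ed_masks = [[[1, 1], [1, 1]], [[1, 1], [0, 1]]]  # 7 pixels at end-diastole
es_masks = [[[1, 0], [0, 1]], [[0, 1], [0, 0]]]  # 3 pixels at end-systole
edv = cavity_volume_ml(ed_masks, pixel_area_mm2=2.0, slice_thickness_mm=10.0)
esv = cavity_volume_ml(es_masks, pixel_area_mm2=2.0, slice_thickness_mm=10.0)
ef = ejection_fraction(edv, esv)
```

Repeating the volume computation for every phase of the cardiac cycle would give a volume-time curve of the kind the filling analysis relies on.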

《中国心血管健康与疾病报告2022》要点解读

1

2023


Image segmentation by using thresholding techniques for medical images

1

2016

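... Traditional medical image segmentation methods rely mainly on thresholds, edges, and regions derived from the image itself. Threshold-based algorithms partition an image into regions by setting gray-level thresholds, and constraint criteria can be defined for the task at hand to determine the optimal threshold; adaptive thresholding, the bimodal histogram method, and iterative thresholding are commonly used. These algorithms are simple and easy to use and are well suited to binarization [2], but they are sensitive to noise and require high image quality. Edge-based algorithms can handle images with irregular contours; they rely on marked changes in features such as color, gray level, and texture and locate edges at the extrema of the first derivative and the zero crossings of the second derivative. Level sets, edge enhancement, and the Canny operator are commonly used, and they perform well when segmenting large organs such as the liver and lungs [3-6]. Region-based algorithms group pixels and determine boundaries according to pixel similarity using various statistical methods; they mainly include region growing, active contour models, clustering, and atlas-based algorithms [7-9]. In summary, traditional segmentation methods run fast and need no additional labeled data for supervision, but they cannot extract image features automatically, require manual intervention during segmentation, and rely on combining several algorithms to refine the contour, so their robustness is poor. ...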
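As an illustration of the iterative thresholding idea named in this passage, the following is a minimal sketch of one common variant: start from the mean gray level, split the pixels into two classes, and move the threshold to the midpoint of the two class means until it stops changing. The function name, tolerance, and toy pixel values are assumptions made for the example, not taken from reference [2].

```python
def iterative_threshold(pixels, tol=0.5, max_iter=100):
    """Iteratively refine a global gray-level threshold for a flat pixel list."""
    t = sum(pixels) / len(pixels)  # initial guess: global mean
    for _ in range(max_iter):
        low = [p for p in pixels if p <= t]
        high = [p for p in pixels if p > t]
        if not low or not high:
            break  # all pixels fall in one class; nothing left to separate
        # New threshold: midpoint between the two class means.
        new_t = 0.5 * (sum(low) / len(low) + sum(high) / len(high))
        converged = abs(new_t - t) < tol
        t = new_t
        if converged:
            break
    return t


# Toy image flattened to a list: dark background and bright object.
pixels = [10, 12, 11, 13, 200, 205, 198, 202]
threshold = iterative_threshold(pixels)
binary = [1 if p > threshold else 0 for p in pixels]
```

On noisy images the two class means overlap, which is one way to see why the passage describes threshold methods as noise-sensitive.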

Efficient liver segmentation using a level-set method with optimal detection of the initial liver boundary from level-set speed images

1

2007


Liver segmentation based on region growing and level set active contour model with new signed pressure force function

1

2020


A novel approach for lung nodules segmentation in chest CT using level sets

1

2013


A level-set approach to joint image segmentation and registration with application to CT lung imaging

1

2018


Automatic seeded region growing image segmentation for medical image segmentation: a brief review

1

2020


Active contour model based on local and global intensity information for medical image segmentation

1

2016


Multi-atlas segmentation of biomedical images: a survey

1

2015


GVFOM: a novel external force for active contour based image segmentation

1

2020

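... Traditional algorithms applied to CMRI segmentation mainly include active contour [10], level set [11], dynamic programming [12], and atlas-based [13,14] models. Among them, the active contour model (ACM) is one of the most influential techniques in computer vision and has been applied successfully to segmentation and other image analysis tasks. The algorithm first initializes a closed contour in the image and defines an energy function, in which the internal energy penalizes the bending of the contour and the external energy drives the contour toward the target; following the energy-minimization principle, the energy function is optimized to find the boundary of the target object. ACM variants introduce probabilistic statistics, prior contours, and similar devices to improve the dynamic evolution of the iteration, but because ACM rests on geometric features and physical principles, the initial contour, the parameters of the energy functional, and the image features all strongly affect the segmentation result. Chan et al. [15] proposed a new contour model that replaces the conventional image gradient with a stopping term that denoises the image while preserving edges, which makes it more robust on images with weak edges; building on this, Saini et al. [16] replaced the stopping term proposed by Chan with the Newton-Raphson method to achieve fast LV segmentation. Liu et al. [17] constructed a generalized normally biased gradient vector flow external force model for ACM, which has a larger capture range and stronger noise resistance and performs notably well against leakage at weak boundaries; finally, a circular-constraint energy term was introduced to overcome the local minima caused by the papillary muscles. ...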
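The internal and external energy terms described in this passage can be written out for the classical parametric snake. The form below is the textbook formulation, given here as an assumption for illustration; the variants cited in [15]-[17] modify the external term in their own ways. The symbols are introduced for this sketch: v(s) is the contour parameterized by s in [0, 1], alpha and beta weight stretching and bending, I is the image, and G_sigma is a Gaussian smoothing kernel.

```latex
$$
E_{\text{snake}}
  = \int_0^1 \Big[ \tfrac{1}{2}\big( \alpha\,\lvert \mathbf{v}'(s) \rvert^{2}
      + \beta\,\lvert \mathbf{v}''(s) \rvert^{2} \big)
      + E_{\text{ext}}\big(\mathbf{v}(s)\big) \Big]\, ds ,
\qquad
E_{\text{ext}}(x, y) = -\,\big\lvert \nabla \big( G_{\sigma} * I \big)(x, y) \big\rvert^{2}
$$
```

The second-derivative term is the bending penalty, and the negative gradient magnitude makes strong edges low-energy locations, so minimizing the total energy pulls the contour toward object boundaries. It also shows why weak edges are a problem: where the gradient is small, the external term gives the contour little to lock onto.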

Left ventricle segmentation via two-layer level sets with circular shape constraint

3

2017


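... Level set algorithms describe the evolution of the segmentation with a partial differential equation and evolve the curve by solving that equation, typically with iterative or finite element methods. Yang et al. [11] and Zhao et al. [18] both used two-layer level sets to extract the LV endocardium and epicardium separately. Yang et al. [11] used distance regularization to refine the anatomical geometry and a circle-fitting term to detect the endocardium; by penalizing the evolving contour, they addressed edge leakage in basal slices and the intensity overlap between trabecular muscle and myocardium. Zhao et al. [18] optimized the LV energy function and regularization function to better constrain the evolution of the outer contour, speeding up curve evolution while avoiding oscillation; their epicardium segmentation was markedly more accurate than that of the distance regularized level set evolution (DRLSE) model. Atlas-based algorithms [13,14] perform segmentation by registering multiple atlas images to the target image with affine transformations and then fusing the results. Bai et al. [13] merged intensity, gradient, and contextual information into an augmented feature vector to describe image features more fully and incorporated a support vector machine to refine the labels, which avoids the edge discontinuities that arise when conventional atlas segmentation considers only local features and thus improves left ventricular myocardium segmentation accuracy. Nuñez-Garcia et al. [14], working with gadolinium-enhanced MR images, classified the atlases by LA shape and then registered them to the target image for segmentation; compared with random atlas selection, this approach picks an optimal atlas set and reduces shape differences, which improves registration accuracy. Qiao et al. [19] instead converted gadolinium-enhanced MR images into probability maps, selected suitable atlases for label fusion, and finally refined the edges with a level set. Wang et al. [20] proposed an RV segmentation method that fuses multiple atlases with COLLATE (Consensus Level Labeler Accuracy and Truth Estimation): B-spline registration of the target image and the atlas set yields a coarse segmentation, COLLATE is then used for fusion, and region growing finally corrects the result to give the final segmentation, which shows higher correlation and agreement when computing ejection fraction. Building on this, Su et al. [21] used the affinity propagation algorithm to obtain the atlas set and registered to the target image using an affine transformation followed by the diffeomorphic demons algorithm; in a retrospective analysis of 30 clinical cases, the method was closer than a convolutional neural network (CNN) to expert manual segmentation at end-systole. ...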
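The fusion step of multi-atlas segmentation can be sketched with the simplest possible rule, per-pixel majority voting. This is a stand-in chosen for brevity, not the COLLATE method of [20] or the SVM-based refinement of [13]; it assumes the atlas label maps have already been registered to the target image and flattened to equal-length lists, and the function name and toy labels are hypothetical.

```python
from collections import Counter


def majority_vote_fusion(propagated_labels):
    """Fuse registered atlas label maps by per-pixel majority vote.

    propagated_labels: list of flat label lists, one per registered atlas.
    """
    n = len(propagated_labels[0])
    if any(len(labels) != n for labels in propagated_labels):
        raise ValueError("all label maps must have the same length")
    fused = []
    # zip(*...) walks the atlases in parallel, one pixel position at a time.
    for votes in zip(*propagated_labels):
        fused.append(Counter(votes).most_common(1)[0][0])
    return fused


# Three toy atlases voting on four pixels (0 = background, 1 = ventricle).
atlases = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [1, 1, 1, 0],
]
fused = majority_vote_fusion(atlases)
```

Using an odd number of atlases avoids ties for binary labels. Weighted or statistical fusion schemes replace the plain vote with per-atlas reliability estimates.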


Hybrid segmentation of left ventricle in cardiac MRI using gaussian-mixture model and region restricted dynamic programming

1

2013


Multi-atlas segmentation with augmented features for cardiac MR images

3

2015


Left atrial segmentation combining multi-atlas whole heart labeling and shape-based atlas selection

3

2019


Active contours without edges

1

2001


A fast region-based active contour model for boundary detection of echocardiographic images

1

2012


A method for segmenting cardiac magnetic resonance images using active contours

1

2012


一种基于主动轮廓模型的心脏核磁共振图像分割方法

1

2012


An improved double level set algorithm for left ventricular segmentation of cardiac MRI images

2

2022


改进的双水平集心脏MRI图像左心室分割算法

2

2022


Fully automated left atrium cavity segmentation from 3D GE-MRI by multi-atlas selection and registration

1

2019


Segmentation of right ventricle in cardiac cine MRI using COLLATE fusion-based multi-atlas

1

2018


基于COLLATE融合多图谱的心脏电影MRI右心室分割

1

2018


A new method of multi-atlas segmentation of right ventricle based on cardiac film magnetic resonance images

1

2019


基于心脏电影磁共振图像的一种新的右心室多图谱分割方法

1

2019


2

2018

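... In cardiac CT image segmentation, ACM and atlas-based approaches are the main choices, with atlas-based approaches [22,23] the most common. Yang et al. [22] were the first to segment the individual regions of the heart with a multi-atlas method. Building on this, Galisot et al. [23] created a separate local probabilistic atlas for each anatomical structure, containing prior shape and position information, which allows structure shapes to be extracted more finely; they then classified pixels with a Markov random field, combining the learned prior information with pixel intensities, and finally used an AdaBoost classifier to correct pixels on region boundaries and improve segmentation accuracy. Li et al. [24] performed a coarse segmentation of a single CT image with a fuzzy level set method and then refined it with 100 iterations of a C-V model level set to obtain the final result. Wu et al. [25] used the DRLSE method to improve the segmentation accuracy of the LV endocardium and epicardium, redesigning the distance regularization term and the external energy term; the Dice coefficients for the LV endocardium and epicardium reached 0.9253 and 0.9687, respectively. However, DRLSE is very sensitive to initialization: the result depends closely on the initial image and parameter settings, and the parameters must be set and the contour initialized separately for each image. He et al. [26] combined morphological reconstruction with random walks for LA segmentation; compared with random walks alone, this refined and reduced the selection of seed points and effectively avoided local over- and under-segmentation. ...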
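The Dice coefficient quoted in this passage measures the overlap between a predicted mask and a reference mask as 2|A ∩ B| / (|A| + |B|). A minimal sketch follows, assuming binary masks flattened to equal-length lists; the function name, the toy masks, and the convention of returning 1.0 when both masks are empty are choices made for this example.

```python
def dice_coefficient(pred, truth):
    """Dice overlap between two binary masks given as flat sequences."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    # Pixels labelled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * intersection / total


pred = [1, 1, 0, 0]   # predicted foreground pixels
truth = [1, 0, 0, 0]  # expert-labelled foreground pixels
score = dice_coefficient(pred, truth)
```

A value of 1 means the two masks coincide exactly and 0 means they do not overlap at all, which is the scale on which the 0.9253 and 0.9687 figures above are reported.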


2

2018


Fuzzy level set segmentation method for cardiac CT image sequence

1

2015


模糊水平集心脏CT图像序列分割方法

1

2015


Cardiac CT segmentation based on distance regularized level set

2

2022


... Table 3. Summary of different segmentation networks based on cardiac CT images

| Method | Year | Framework | Dataset | Dice LV | Dice RV | Dice Myo | HD LV (mm) | HD RV (mm) | HD Myo (mm) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CNN[93] | 2016 | - | 60 clinical cases | 0.85 | - | - | - | - | - |
| Combining faster R-CNN and U-net[54] | 2018 | PyTorch | MM-WHS2017 | 0.879 | 0.902 | 0.822 | - | - | - |
| CNN[102] | 2018 | TensorFlow | 11 clinical cases | 0.878 | 0.829 | - | - | - | - |
| Hybrid loss guided CNN[65] | 2018 | TensorFlow | MM-WHS2017 | 0.8680 | 0.7143 | 0.665 | - | - | - |
| CNN and anatomical label configurations[94] | 2018 | Caffe | MM-WHS2017 | 0.918 | 0.909 | 0.881 | - | - | - |
| 3D deeply-supervised U-Net[55] | 2018 | - | MM-WHS2017 | 0.893 | 0.810 | 0.837 | - | - | - |
| DL and shape context[59] | 2018 | Keras | MM-WHS2017 | 0.935 | 0.825 | 0.879 | - | - | - |
| Multi-planar deep segmentation networks[99] | 2018 | TensorFlow | MM-WHS2017 | 0.904 | 0.883 | 0.851 | - | - | - |
| 3D CNN[103] | 2018 | TensorFlow | MM-WHS2017 | 0.923 | 0.857 | 0.856 | - | - | - |
| Two-stage 3D U-net[56] | 2018 | TensorFlow | MM-WHS2017 | 0.800 | 0.786 | 0.729 | - | - | - |
| Multi-depth fusion network[58] | 2019 | TensorFlow | MICCAI 2017 whole-heart CT dataset | 0.944 | 0.895 | 0.889 | - | - | - |
| 3D deeply supervised attention U-net[57] | 2020 | MATLAB | 100 clinical cases | 0.916 | - | - | 6.840 | - | - |
| DL[66] | 2020 | - | 1100 clinical cases | - | - | 0.883 | - | - | 13.4 |
| Unet-GAN[98] | 2021 | PyTorch | MM-WHS2017 | 0.889 (overall mean) | | | | | |
| Multiple GAN guided by Self-attention mechanism[97] | 2021 | - | MM-WHS2017 | 0.814 | - | 0.669 | - | - | - |
| AttU_Net_conv1_5Mffp[62] | 2021 | PyTorch | MM-WHS2017 | 0.907 | 0.842 | 0.906 | - | - | - |
| PC-Unet[60] | 2021 | - | 20 clinical cases | 0.885 | - | - | 7.05 | - | - |
| Computer graphics imaging and DL[129] | 2022 | - | 130 clinical cases | - | 0.81~0.95 | - | - | - | - |
| DRLSE[25] | 2022 | - | 5 clinical cases | 0.9253 | - | - | 7.874 | - | - |
| 4D contrast-enhanced[104] | 2022 | PyTorch | 1509 clinical cases | 0.8 (overall mean) | | | - | - | - |
| MRDFF[95] | 2022 | - | MM-WHS2017 | 0.899 | 0.823 | - | - | - | - |
| Transnunet[64] | 2022 | - | MM-WHS2017 | 0.921 | - | - | - | - | - |
| Self-attention mechanism[45] | 2023 | TensorFlow | 96 clinical cases | - | - | 0.9202 | - | - | - |

LV: left ventricle; RV: right ventricle; Myo: myocardium; HD: Hausdorff distance. "Overall mean" is a single Dice value reported across all structures.

4.3 Summary of UCG image segmentation methods

In UCG image segmentation research, the commonly used geometric deformable, level set, and active contour models rest on mathematical and physical principles; they cannot detect boundary contours effectively in low-definition UCG images and require manually assisted initialization. Among deep learning (DL) approaches, U-Net remains the mainstream network. In addition, UCG is used in fetal examination, where it enables rapid screening for conditions such as congenital heart disease, and the proposed dynamic CNN markedly speeds up segmentation and measurement of fetal ventricular images. Table 4 summarizes the environments and accuracy of different network models applied to UCG image segmentation. ...
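Alongside the Dice coefficient, Table 3 reports the Hausdorff distance (HD) in millimetres, which captures the worst-case disagreement between two contours. The sketch below computes the symmetric HD between two sets of boundary points; it assumes points are given in pixel coordinates with isotropic spacing, and the function names, the `spacing_mm` parameter, and the toy contours are illustrative assumptions.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a, b, spacing_mm=1.0):
    """Symmetric Hausdorff distance, scaled from pixels to millimetres."""
    return spacing_mm * max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy boundary point sets in pixel coordinates.
contour_pred = [(0, 0), (0, 1)]
contour_true = [(0, 0), (3, 0)]
hd_pixels = hausdorff_distance(contour_pred, contour_true)
hd_mm = hausdorff_distance(contour_pred, contour_true, spacing_mm=0.5)
```

Because HD is driven by the single worst boundary point, a segmentation can score a high Dice value and still have a large HD if one small region leaks.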

Study of left atrium segmentation in dual source CT image with random walks algorithms

1

2016


基于Random Walks算法的心脏双源CT左心房分割

1

2016


Automated border detection in three-dimensional echocardiography: principles and promises

1

2010

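... Dynamic analysis of cardiac chamber volume over the whole cardiac cycle from UCG images has irreplaceable advantages for assessing left and right ventricular function. Traditional methods for segmenting the LV in 2D and 3D UCG images mainly include geometric deformable models and active-contour-based models [27,28]. However, when applied to UCG segmentation these algorithms lack standardization and public datasets, which limits model evaluation and comparison. Hansegard et al. [29] proposed an algorithm that combines the parameters of a three-dimensional active contour model on a quadrilateral mesh with the state vector of a Kalman filter to measure and fuse spatial information. Smistad et al. [30] used Kalman filtering and edge detection to track the mesh automatically in real time in every frame, markedly improving segmentation accuracy. Huang et al. [31] used an ellipsoid model to measure chamber volume quantitatively and, building on it, introduced a vortical gradient vector flow (VGVF) external force field and a greedy algorithm to deform the initial contour in cardiac ultrasound images; compared with the traditional Snake and GVF Snake models, their segmentation results were closer to expert manual segmentation. ...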
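The Kalman filtering used for frame-by-frame tracking in this passage can be illustrated with a deliberately reduced sketch: a scalar filter that tracks one contour parameter (for example, a single radius) from noisy per-frame edge measurements. This is not the mesh-state formulation of [29] or [30]; the constant-state motion model, the noise variances, and all names below are assumptions made for the example.

```python
def kalman_1d(measurements, process_var=1e-2, meas_var=1.0, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a constant-state motion model."""
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Fifty frames all measuring the same radius of 5.0 (toy data, no noise).
estimates = kalman_1d([5.0] * 50)
```

In a real tracker the state would be a vector of contour or mesh parameters and the measurements would come from edge detection in each frame, but the same predict-then-update cycle is what carries information from one frame to the next.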

A multiple active contour model for cardiac boundary detection on echocardiographic sequences

1

1996


1

2007


1

2014


Adoption of snake variable model-based method in segmentation and quantitative calculation of cardiac ultrasound medical images

1

2021


Gradient-based learning applied to document recognition

1

1998

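... Figure 2 shows the basic workflow of acquiring medical images and segmenting them with DL. In recent years, with improvements in hardware such as GPUs (graphics processing units) and in algorithms, DL has raised the utilization of medical images by learning image features autonomously and capturing useful information from large datasets. The high accuracy and robustness of DL provide a powerful tool for medical image segmentation and help physicians reach diagnostic and treatment decisions faster, thereby improving the level of care and patient survival. The DL networks commonly used for medical image segmentation today include the CNN, recurrent neural network (RNN), fully convolutional network (FCN), U-Net, residual network (ResNet), and generative adversarial network (GAN). The CNN model originated with LeNet, proposed by LeCun et al.[32] in 1998 for image classification; limited computing power, insufficient datasets, and the lack of effective activation functions at the time prevented good training results and held back CNN development. AlexNet[33], proposed in 2012, used the ReLU activation function, Dropout (randomly dropping the outputs of some nodes during training to reduce overfitting), and GPUs, greatly improving image recognition accuracy and accelerating the development of DL segmentation methods, but the network is restricted to fixed-size images. To accept inputs and produce outputs of arbitrary size, extracting global information from the input image and predicting at the pixel level, Long et al. proposed FCN[34] in 2015, which replaces the fully connected layers of a CNN with convolutional layers. In the same year, Ronneberger et al. proposed U-Net[35], an end-to-end architecture with a relatively simple and fixed structure that is widely applied to small and multimodal datasets. Its main feature is a symmetric U-shaped structure consisting of a downsampling path (encoder) that captures semantic information and an upsampling path (decoder) that enables precise localization: downsampling reduces the image size layer by layer to extract features such as color and contour, while upsampling fuses shallow and deep features through skip connections and applies transposed convolutions to restore the feature map to the input image size. ...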
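The encoder, decoder, and skip-connection bookkeeping described above can be traced with a small shape calculator. This is a sketch under stated assumptions rather than the original U-Net: it assumes "same"-padded convolutions, 2x2 pooling, 2x upsampling, and channel doubling per level, and the function name `unet_shapes` and its parameters are hypothetical.

```python
def unet_shapes(size, depth=4, base_channels=64):
    """Trace (spatial size, channels) through a U-Net-like network.

    Assumes 'same' padding, so only pooling and upsampling change the size.
    Returns (encoder, bottleneck, decoder), where each decoder entry is
    (size, channels after skip concatenation, channels after convolution).
    """
    if size % (2 ** depth) != 0:
        raise ValueError("size must be divisible by 2**depth for skips to align")

    encoder = []
    s, ch = size, base_channels
    for _ in range(depth):
        encoder.append((s, ch))   # features kept for the skip connection
        s //= 2                   # 2x2 pooling halves the resolution
        ch *= 2                   # deeper levels use more channels
    bottleneck = (s, ch)

    decoder = []
    for skip_size, skip_ch in reversed(encoder):
        s *= 2                    # upsampling doubles the resolution
        ch //= 2                  # transposed convolution halves the channels
        assert s == skip_size     # shallow and deep features now align
        decoder.append((s, ch + skip_ch, ch))
    return encoder, bottleneck, decoder


if __name__ == "__main__":
    enc, bott, dec = unet_shapes(256)
    print("encoder:", enc)
    print("bottleneck:", bott)
    print("decoder:", dec)
```

The divisibility check reflects a practical consequence of the symmetric design: if pooling rounds a size down, the upsampled map no longer matches the stored encoder map and the concatenation fails unless the features are cropped or padded.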

ImageNet classification with deep convolutional neural networks

1

2017


1

2015


U-net: Convolutional networks for biomedical image segmentation

1

2015


Automated left and right ventricular chamber segmentation in cardiac magnetic resonance images using dense fully convolutional neural network

2

2021

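... Among approaches that enhance skip connections, Penso et al.[36] segmented the RV and LV automatically with a U-Net architecture whose skip connections were redesigned: dense blocks were introduced to narrow the semantic gap between the U-Net encoder and decoder, and each layer was connected to the feature maps of the preceding layer to add and preserve feature information. Wang et al.[37] fused squeeze-and-excitation modules and residual modules into the encoder so that the skip connections deliver more edge detail during decoder feature fusion, reducing the influence of trabeculae and papillary muscles on segmentation. ...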

... Table 2 Summary of segmentation networks based on CMRI images

| Method | Year | Framework | Dataset | Dice (LV) | Dice (RV) | Dice (Myo) | | HD (LV)/mm | HD (RV)/mm | HD (Myo)/mm |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Active contour models[87] | 2019 | TensorFlow | ACDC | 0.986 | 0.940 | 0.969 | | 4.73 | 5.95 | 5.42 |
| MSU-Net[42] | 2019 | TensorFlow | ACDC | 0.897 | 0.855 | 0.836 | | - | - | - |
| 3D high resolution[92] | 2019 | TensorFlow | 1912 clinical cases | 0.8792 | - | - | | 3.99 | - | - |
| Dynamic pixel-wise weighting-FCN[52] | 2020 | TensorFlow | MICCAI 2013 | - | - | 0.803 | | - | - | - |
| FCN for left ventricle segmentation[128] | 2020 | - | MICCAI2009, 33 clinical cases | 0.95 | - | 0.914 | | - | - | - |
| CNN incorporating domain-specific constraints[38] | 2020 | TensorFlow | ACDC | 0.959 | 0.924 | 0.873 | | - | - | - |
| Combined CNN and U-net[50] | 2020 | PyTorch | MICCAI2009 | 0.951 | - | - | | 3.641 | - | - |
| Automatic segmentation and quantification[46] | 2020 | PyTorch | ACDC, clinical data | 0.96 | - | 0.88 | | 6.31 | - | 7.11 |
| Fully automatic segmentation of RV and LV[88] | 2020 | PyTorch | ACDC, 5570 clinical cases | 0.927 | 0.873 | - | | - | - | - |
| Deep CNN[41] | 2020 | TensorFlow | MICCAI2009 | 0.961 | 0.949 | 0.867 | | - | - | - |
| DMU-net[43] | 2020 | Keras | 71 clinical cases | - | - | - | | - | 4.445 | - |
| Semi-supervised[47] | 2021 | PyTorch | M&Ms | 0.909 | 0.879 | 0.845 | | 9.42 | 12.65 | 11.85 |
| Active contour models[82] | 2021 | - | ACDC / LVQuan18 | 0.890 / 0.805 | - | - | | 12.247 / 19.717 | - | - |
| Deep reinforcement learning[53] | 2021 | - | ACDC / Sunnybrook2009 | 0.9502 / 0.9351 | - | - | | - | - | - |
| Attention guided U-Net[39] | 2021 | TensorFlow | LVSC | - | - | 0.956 | | - | - | 1.456 |
| Dense FCN[36] | 2021 | TensorFlow | 210 clinical cases | 0.944 | 0.908 | 0.851 | | 7.2 | 7.35 | 5.9 |
| SegNet[85] | 2022 | TensorFlow | 1354 clinical cases | 0.878 | - | - | | 10.163 | - | - |
| FCN[51] | 2022 | - | 150 clinical cases | 0.930 | - | - | | - | - | - |
| Cascade approach structures[49] | 2022 | TensorFlow | ACDC | 0.963 | 0.900 | 0.894 | | 8.062 | 14.660 | 7.906 |
| DEU-Net2.0[40] | 2022 | PyTorch | ACDC | 0.970 | 0.949 | 0.904 | | 7.0 | 12.2 | 9.0 |
| RNN with Atrous Spatial pyramid pooling[86] | 2022 | PyTorch | 56 clinical cases | - | - | 0.8543 | | - | - | - |
| OSFNet[90] | 2022 | TensorFlow | ACDC | 0.946 | - | - | | 3.976 | - | - |
| Deep Atlas network[44] | 2023 | TensorFlow | 71 clinical cases | - | 0.902 | - | | - | 4.358 | - |
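The two metrics reported in Table 2 can be made concrete with a minimal pure-Python sketch. It assumes binary masks represented as sets of (row, column) pixel coordinates and isotropic pixel spacing; the function names and the `spacing` parameter are illustrative assumptions, and published results are usually computed on contours or surfaces with dedicated libraries.

```python
from math import hypot


def dice(pred, truth):
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two sets of pixel coordinates."""
    if not pred and not truth:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * len(pred & truth) / (len(pred) + len(truth))


def hausdorff(a, b, spacing=1.0):
    """Symmetric Hausdorff distance between two non-empty point sets.

    spacing: assumed isotropic pixel size in mm, used to convert pixels to mm.
    """
    def directed(src, dst):
        # Largest distance from a point in src to its nearest point in dst.
        return max(min(hypot(p[0] - q[0], p[1] - q[1]) for q in dst) for p in src)

    return spacing * max(directed(a, b), directed(b, a))


if __name__ == "__main__":
    truth = {(r, c) for r in range(2, 6) for c in range(2, 6)}  # 4x4 square
    pred = {(r, c) for r in range(3, 7) for c in range(2, 6)}   # shifted down one row
    print(dice(pred, truth))       # 0.75: 12 shared pixels out of 16 + 16
    print(hausdorff(pred, truth))  # 1.0: no pixel is more than one row from the other mask
```

Dice rewards overall overlap, while the Hausdorff distance is set by the single worst boundary error, which helps explain why a method in the table can rank well on one metric and poorly on the other.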

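4.2 Summary of cardiac CT image segmentation methods. Among traditional algorithms for cardiac CT segmentation, atlas-based methods are the most common, but such algorithms are sensitive to initialization. Among DL approaches, U-Net remains the most widely used base network, and optimizing the loss function and strengthening the U-shaped structure can significantly improve segmentation performance. Improvements based on cascading and on Transformers were also covered. In the past two years, combining Transformers with other networks has become a research focus, improving segmentation accuracy without increasing the number of parameters. Table 3 compares the performance of different models for cardiac CT image segmentation. ...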

Squeeze-and-excitation residual U-shaped network for left myocardium segmentation based on cine cardiac magnetic resonance images

1

2023


Cardiac MRI segmentation with a dilated CNN incorporating domain-specific constraints

4

2020

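... Among approaches that improve the loss function, Simantiris et al.[38] proposed a new smoothed loss function to reduce overfitting; compared with other U-Nets of the same depth, the network's loss converges well after fewer training batches. Cui et al.[39] introduced the Focal Tversky loss, which adds a weighting factor that down-weights easy samples to strengthen feature learning on hard samples with small ROIs, effectively addressing the severe imbalance between target and background in cardiac image segmentation. ...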
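A minimal sketch of a Focal Tversky loss for one binary mask is given below. It is an illustration, not the implementation of Cui et al.: the values `alpha=0.7`, `beta=0.3`, and `gamma=0.75`, and the choice of raising (1 − TI) to the power `gamma`, are assumptions, and some implementations use the exponent 1/γ instead.

```python
def tversky_index(probs, labels, alpha=0.7, beta=0.3, eps=1e-7):
    """Tversky index TP / (TP + alpha*FN + beta*FP) on flattened soft predictions.

    probs: predicted foreground probabilities in [0, 1]
    labels: ground-truth values, 1 for foreground and 0 for background
    alpha > beta penalizes missed foreground more than false alarms.
    """
    tp = sum(p * y for p, y in zip(probs, labels))
    fn = sum((1 - p) * y for p, y in zip(probs, labels))
    fp = sum(p * (1 - y) for p, y in zip(probs, labels))
    return (tp + eps) / (tp + alpha * fn + beta * fp + eps)


def focal_tversky_loss(probs, labels, alpha=0.7, beta=0.3, gamma=0.75):
    """Focal Tversky loss (1 - TI) ** gamma; the exponent convention is assumed."""
    return (1.0 - tversky_index(probs, labels, alpha, beta)) ** gamma


if __name__ == "__main__":
    labels = [1, 1, 0, 0]
    missed = [1.0, 0.0, 0.0, 0.0]       # one foreground pixel missed
    false_alarm = [1.0, 1.0, 1.0, 0.0]  # one background pixel marked as foreground
    print(focal_tversky_loss(missed, labels))
    print(focal_tversky_loss(false_alarm, labels))
```

With `alpha` larger than `beta`, the missed-pixel case receives the larger loss, which is the behavior wanted when the target occupies only a small part of the image. Setting `alpha = beta = 0.5` reduces the Tversky index to the Dice coefficient.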

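... Among approaches that add local modules, Zhang et al.[45] introduced an attention mechanism into U-Net to segment the blood pool and myocardium simultaneously and achieved fine LV segmentation with a 3D U-Net; trained and validated on 96 clinical cases, the network obtained a Dice coefficient of 0.9443. Simantiris et al.[38] performed semantic segmentation with dilated convolutions on top of data augmentation, first extracting the cardiac ROI with a 3D Markov random field; dilated convolutions improve localization accuracy across the network layers with fewer parameters. Refs.[39,40] used multi-scale attention modules: Dong et al.[40] proposed a multi-scale attention module based on an enhanced deformable attention network to capture long-range dependencies among features at different scales, while a probabilistic noise correction module treats the fused features as a distribution to quantify uncertainty. The network of Cui et al.[39] masks the background while learning the target structure fully automatically and fuses the squeeze-and-excitation (SE) module[46] with the backbone to form an SENet structure, enabling effective training on different datasets and improving generalization. Zhang et al.[47] exploited unlabeled data in a semi-supervised manner, generating pseudo-labels for unlabeled images by label propagation and iterative refinement, then training with both manual labels and pseudo-labels and fine-tuning on manually labeled data. ...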

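... With better computer hardware and larger datasets, DL-based segmentation algorithms have gradually surpassed the most advanced traditional algorithms; Table 2 summarizes the segmentation performance of different networks. Most networks currently used for CMRI segmentation are based on the U-Net framework, with modules adapted to the data at hand to reduce the number of trainable parameters and speed up training while maintaining segmentation accuracy. Refs.[38,39,47,124-127] studied different loss functions, mainly weighted cross-entropy, weighted Dice, deep supervision, and focal loss, to improve segmentation performance. Most FCN-based methods use 2D rather than 3D networks because the low resolution and motion artifacts of CMRI limit the applicability of 3D networks. Because traditional algorithms are fast and can refine segmentation details, Refs.[79-82] combined traditional and DL algorithms to improve segmentation accuracy, with good results. ...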


Multiscale attention guided U-Net architecture for cardiac segmentation in short-axis MRI images

5

2021


DeU-Net 2.0: Enhanced deformable U-Net for 3D cardiac cine MRI segmentation

4

2022

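... Among approaches that strengthen the U-shaped path, Dong et al.[40] proposed an enhanced deformable network for 3D CMRI segmentation, consisting of a temporal deformable aggregation module, an enhanced deformable attention network, and a probabilistic noise correction module. The temporal deformable aggregation module takes consecutive CMRI slices as input and extracts spatio-temporal information through an offset prediction network to generate fused features for the target slice; these are then fed to the enhanced deformable attention network to produce sharp boundaries in each segmentation map. Dong et al.[41] proposed a parallel U-Net made of two interacting sub-networks for LV segmentation; extracted features can pass between the sub-networks, improving feature utilization, and multi-task learning built into the design improves generalization. Refs.[42,43] used multi-scale U-Nets: Wang et al.[42] proposed a multi-scale statistical U-Net for 3D CMRI data, modeling input samples as a multi-scale canonical-form distribution and combining multi-scale data sampling with U-Net to exploit spatio-temporal correlation; compared with a plain U-Net and an improved GridNet, the network achieved speed-ups of 268% and 237% and raised the Dice coefficient by 1.6% and 3.6%. Liu et al.[43] proposed a dense multi-scale U-Net for RV segmentation, normalizing and augmenting the data before feature extraction, with markedly higher accuracy than U-Net at end-systole. Building on this, Wang et al.[44] fused the dense multi-scale U-Net with a multi-atlas algorithm: the network estimates the transformation parameters between the atlas set and the target image, the atlas labels are mapped onto the target image under two loss-function constraints, and the method outperformed U-Net++ and other approaches in RV segmentation. ...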


Automatic segmentation of left ventricle using parallel end-end deep convolutional neural networks framework

2

2020


3

2019


Automatic segmentation of right ventricle in cine cardiac magnetic resonance image based on a dense and multi-scale U-net method

3

2020


基于密集多尺度U-net网络的电影心脏磁共振图像右心室自动分割

3

2020

... 在强化U形路径方式中,Dong等[40]提出增强可变形网络用于3D CMRI图像分割,它由时间可变形聚集模块、增强可变形注意力网络和概率噪声校正模块构成.时间可变形聚集模块以连续的CMRI切片作为输入,通过偏移预测网络提取时空信息,生成目标切片的融合特征,然后将融合后的特征输入到增强可变形注意力网络中,生成每层分割图的清晰边界.Dong等[41]提出由两个交互式子网络组成的并行U-Net用于LV分割,提取的特征可以在子网络之间传递,提高了特征的利用率;此外,将多任务学习融入到网络设计中提升了网络泛化能力.文献[42,43]采用多尺度U-Net实现分割,其中Wang等[42]提出了多尺度统计U-Net用于CMRI三维数据分割,输入样本建模为多尺度标准形分布,将多尺度数据采样方法与U-Net相结合,充分利用时空相关性来处理数据,与普通U-Net及改进的GridNet方法相比,该网络实现了268%和237%的加速,Dice系数提高了1.6%和3.6%.Liu等[43]提出密集多尺度U-Net用于RV分割,将数据归一化并增强后采用该网络进行特征提取,相比U-Net在收缩末期精度显著提升,在此基础上,Wang等[44]采用密集多尺度U-Net融合多图谱算法实现分割,其中密集多尺度U-Net提取图谱集和目标图像之间的转换参数,将图谱集标签映射到目标图像且该过程有两个损失函数进行约束,在RV分割评估中优于U-Net++等方式. ...

... Table 2. Summary of segmentation networks based on CMRI images

| Method | Year | Framework | Dataset | Dice LV | Dice RV | Dice Myo | HD LV (mm) | HD RV (mm) | HD Myo (mm) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Active contour models[87] | 2019 | TensorFlow | ACDC | 0.986 | 0.940 | 0.969 | 4.73 | 5.95 | 5.42 |
| MSU-Net[42] | 2019 | TensorFlow | ACDC | 0.897 | 0.855 | 0.836 | - | - | - |
| 3D high resolution[92] | 2019 | TensorFlow | 1912 clinical cases | 0.8792 | - | - | 3.99 | - | |
| Dynamic pixel-wise weighting-FCN[52] | 2020 | TensorFlow | MICCAI 2013 | - | - | 0.803 | - | - | - |
| FCN for left ventricle segmentation[128] | 2020 | - | MICCAI 2009, 33 clinical cases | 0.95 | - | 0.914 | - | - | - |
| CNN incorporating domain-specific constraints[38] | 2020 | TensorFlow | ACDC | 0.959 | 0.924 | 0.873 | - | - | - |
| Combined CNN and U-net[50] | 2020 | PyTorch | MICCAI 2009 | 0.951 | - | - | 3.641 | - | - |
| Automatic segmentation and quantification[46] | 2020 | PyTorch | ACDC, clinical data | 0.96 | - | 0.88 | 6.31 | - | 7.11 |
| Fully automatic segmentation of RV and LV[88] | 2020 | PyTorch | ACDC, 5570 clinical cases | 0.927 | 0.873 | - | - | - | - |
| Deep CNN[41] | 2020 | TensorFlow | MICCAI 2009 | 0.961 | 0.949 | 0.867 | - | - | - |
| DMU-net[43] | 2020 | Keras | 71 clinical cases | - | - | - | - | 4.445 | - |
| Semi-supervised[47] | 2021 | PyTorch | M&Ms | 0.909 | 0.879 | 0.845 | 9.42 | 12.65 | 11.85 |
| Active contour models[82] | 2021 | - | ACDC, LVQuan18 | 0.890 / 0.805 | - | - | 12.247 / 19.717 | - | - |
| Deep reinforcement learning[53] | 2021 | - | ACDC, Sunnybrook2009 | 0.9502 / 0.9351 | - | - | - | - | - |
| Attention guided U-Net[39] | 2021 | TensorFlow | LVSC | - | - | 0.956 | - | - | 1.456 |
| Dens FCN[36] | 2021 | TensorFlow | 210 clinical cases | 0.944 | 0.908 | 0.851 | 7.2 | 7.35 | 5.9 |
| SegNet[85] | 2022 | TensorFlow | 1354 clinical cases | 0.878 | - | - | 10.163 | - | - |
| FCN[51] | 2022 | - | 150 clinical cases | 0.930 | - | - | - | - | - |
| Cascade approach structures[49] | 2022 | TensorFlow | ACDC | 0.963 | 0.900 | 0.894 | 8.062 | 14.660 | 7.906 |
| DEU-Net2.0[40] | 2022 | PyTorch | ACDC | 0.970 | 0.949 | 0.904 | 7.0 | 12.2 | 9.0 |
| RNN with Atrous Spatial pyramid pooling[86] | 2022 | PyTorch | 56 clinical cases | - | - | 0.8543 | - | - | - |
| OSFNet[90] | 2022 | TensorFlow | ACDC | 0.946 | - | - | 3.976 | - | - |
| Deep Atlas network[44] | 2023 | TensorFlow | 71 clinical cases | - | 0.902 | - | - | 4.358 | - |
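As a reading aid for the Dice and Hausdorff distance (HD) columns above, the following is a minimal sketch of how the two metrics are commonly defined for binary masks. It is not taken from any of the cited works: the function names `dice_coefficient` and `hausdorff_distance` are hypothetical, masks are assumed to be 2D lists of 0/1, and the distance is computed over all foreground pixels (papers often use contour points only) in pixel units scaled by an assumed isotropic `spacing_mm`.

```python
import math


def foreground(mask):
    """Return the set of (row, col) coordinates where the mask is nonzero."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    a, b = foreground(pred), foreground(truth)
    if not a and not b:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))


def hausdorff_distance(pred, truth, spacing_mm=1.0):
    """Symmetric Hausdorff distance between the two foreground point sets."""
    a, b = foreground(pred), foreground(truth)
    if not a or not b:
        raise ValueError("Hausdorff distance is undefined for an empty mask")

    def directed(src, dst):
        # Largest distance from any point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return spacing_mm * max(directed(a, b), directed(b, a))


# Toy 3x3 masks (illustrative values only, not data from any cited study).
pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
truth = [[0, 1, 1],
         [0, 1, 0],
         [0, 1, 0]]

print(dice_coefficient(pred, truth))    # overlap of 3 pixels, 4 pixels in each mask
print(hausdorff_distance(pred, truth))  # worst-case nearest-neighbour distance
```

A higher Dice value means better overlap, while a lower HD means the worst boundary error is smaller, which is why the two columns are reported side by side. The brute-force search here is quadratic in the number of foreground pixels and is only meant for illustration.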

Automatic right ventricular segmentation for cine cardiac magnetic resonance images based on a new deep atlas network

2

2023

An automatic segmentation method with self-attention mechanism on left ventricle in gated PET/CT myocardial perfusion imaging

2

2023

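... Among methods that add local modules, Zhang et al.[45] introduced an attention mechanism into U-Net so that the blood pool and myocardium can be segmented simultaneously, and used a 3D U-Net for fine LV segmentation; the network was trained and validated on 96 clinical cases and achieved a Dice coefficient of 0.9443. Simantiris et al.[38] used dilated convolutions for semantic segmentation on top of data augmentation: a 3D Markov random field first extracts the cardiac ROI, and the dilated convolutions improve localization accuracy across the network layers with fewer parameters. References [39,40] use multi-scale attention modules. Dong et al.[40] proposed a multi-scale attention module based on an enhanced deformable attention network to capture long-range dependencies between features at different scales, while a probabilistic noise correction module treats the fused features as a distribution to quantify uncertainty. The network proposed by Cui et al.[39] masks out the background while learning the target structure fully automatically, and fuses the Squeeze-and-Excitation (SE) module[46] with the backbone to form an SEnet structure, which enables effective training on different datasets and improves generalization. Zhang et al.[47] exploited unlabeled data in a semi-supervised manner: label propagation and iterative refinement generate pseudo-labels for unlabeled images, and the network is then trained on both manual labels and pseudo-labels and fine-tuned on the manually labeled data. ...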
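To make the Squeeze-and-Excitation idea mentioned above concrete, here is a minimal pure-Python sketch of the generic SE computation (global average pooling, a bottleneck of two fully connected layers, and channel-wise rescaling). It is an illustration under stated assumptions, not the network of Cui et al.: the function name `se_block`, the omission of biases, and the weight values in the example are all hypothetical.

```python
import math


def se_block(feature_map, w1, w2):
    """Reweight the channels of a feature map in Squeeze-and-Excitation style.

    feature_map: list of C channels, each an H x W list of floats.
    w1: (C // r) x C weights of the reduction layer (hypothetical values).
    w2: C x (C // r) weights of the expansion layer (hypothetical values).
    Biases are omitted for brevity.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]

    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]

    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[v * g for v in row] for row in ch]
              for ch, g in zip(feature_map, gates)]
    return scaled, gates


# Two 2x2 channels, reduction ratio r = 2, so the bottleneck has one unit.
feature_map = [[[4.0, 4.0], [4.0, 4.0]],
               [[1.0, 1.0], [1.0, 1.0]]]
w1 = [[1.0, -1.0]]       # hypothetical reduction weights
w2 = [[2.0], [-2.0]]     # hypothetical expansion weights

scaled, gates = se_block(feature_map, w1, w2)
print(gates)  # first channel is emphasised, second is suppressed
```

In a trained network the two weight matrices are learned, so the gates adapt to each input and let the backbone emphasise informative channels at a small parameter cost.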

... Table 3. Summary of different segmentation networks based on cardiac CT images

| Method | Year | Framework | Dataset | Dice LV | Dice RV | Dice Myo | HD LV (mm) | HD RV (mm) | HD Myo (mm) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CNN[93] | 2016 | - | 60 clinical cases | 0.85 | - | - | - | - | - |
| Combining faster R-CNN and U-net[54] | 2018 | PyTorch | MM-WHS2017 | 0.879 | 0.902 | 0.822 | - | - | - |
| CNN[102] | 2018 | TensorFlow | 11 clinical cases | 0.878 | 0.829 | - | - | - | - |
| Hybrid loss guided CNN[65] | 2018 | TensorFlow | MM-WHS2017 | 0.8680 | 0.7143 | 0.665 | - | - | - |
| CNN and anatomical label configurations[94] | 2018 | Caffe | MM-WHS2017 | 0.918 | 0.909 | 0.881 | - | - | - |
| 3D deeply-supervised U-Net[55] | 2018 | - | MM-WHS2017 | 0.893 | 0.810 | 0.837 | - | - | - |
| DL and shape context[59] | 2018 | Keras | MM-WHS2017 | 0.935 | 0.825 | 0.879 | - | - | - |
| Multi-planar deep segmentation networks[99] | 2018 | TensorFlow | MM-WHS2017 | 0.904 | 0.883 | 0.851 | - | - | - |
| 3D CNN[103] | 2018 | TensorFlow | MM-WHS2017 | 0.923 | 0.857 | 0.856 | - | - | - |
| Two-stage 3D U-net[56] | 2018 | TensorFlow | MM-WHS2017 | 0.800 | 0.786 | 0.729 | - | - | - |
| Multi-depth fusion network[58] | 2019 | TensorFlow | MICCAI 2017 whole-heart CT dataset | 0.944 | 0.895 | 0.889 | - | - | - |
| 3D deeply supervised attention U-net[57] | 2020 | MATLAB | 100 clinical cases | 0.916 | - | - | 6.840 | - | - |
| DL[66] | 2020 | - | 1100 clinical cases | - | - | 0.883 | - | - | 13.4 |
| Unet-GAN[98] | 2021 | PyTorch | MM-WHS2017 | overall mean 0.889 | | | | | |
| Multiple GAN guided by Self-attention mechanism[97] | 2021 | - | MM-WHS2017 | 0.814 | - | 0.669 | - | - | - |
| AttU_Net_conv1_5Mffp[62] | 2021 | PyTorch | MM-WHS2017 | 0.907 | 0.842 | 0.906 | - | - | - |
| PC-Unet[60] | 2021 | - | 20 clinical cases | 0.885 | - | - | 7.05 | - | - |
| Computer graphics imaging and DL[129] | 2022 | - | 130 clinical cases | - | 0.81~0.95 | - | - | - | - |
| DRLSE[25] | 2022 | - | 5 clinical cases | 0.9253 | - | - | 7.874 | - | - |
| 4D contrast-enhanced[104] | 2022 | PyTorch | 1509 clinical cases | overall mean 0.8 | | | - | - | - |
| MRDFF[95] | 2022 | - | MM-WHS2017 | 0.899 | 0.823 | - | - | - | - |
| Transnunet[64] | 2022 | - | MM-WHS2017 | 0.921 | - | - | - | - | - |
| Self-attention mechanism[45] | 2023 | TensorFlow | 96 clinical cases | - | - | 0.9202 | - | - | - |

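4.3 Summary of UCG image segmentation methods

In UCG image segmentation research, the commonly used geometric deformable models, level sets, and active contour models are based on mathematical and physical principles; they cannot reliably detect boundary contours in low-definition UCG images and require manual initialization. Among DL methods, U-Net remains the mainstream network. UCG is also used for fetal examination, where it enables rapid screening for conditions such as congenital heart disease, and the proposed dynamic CNN markedly speeds up segmentation and measurement of fetal ventricular images. Table 4 summarizes the environments and accuracies of different network models applied to UCG image segmentation. ...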

A deep learning-based approach for automatic segmentation and quantification of the left ventricle from cardiac cine MR images

4

2020

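... The encoder-decoder structure of FCN allows it to accept inputs of arbitrary size and produce outputs of the same size. Tran et al.[48] were the first to apply an FCN to semantic segmentation of the ventricles in CMRI. References [46,49,50] use a cascade that first extracts the region of interest (ROI) and then performs segmentation. The segmentation network proposed by Da Silva et al.[49] uses B3 modules in the contracting path and skip connections and attention modules in the expanding path, and finally applies a U-Net to post-process the segmented images and refine the result. Abdeltawab et al.[46], after extracting the ROI, proposed an FCN improved with a new radial loss function to segment the ventricle and myocardium, minimizing the distance between the predicted and ground-truth LV contours while training fewer parameters. Wu et al.[50] used an FCN to locate the ROI and then a U-Net to segment the LV, and tested the approach on the MICCAI 2009 dataset. ...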
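The cascade strategy described above (locate the ROI first, then segment only inside it) can be sketched as follows. This is a schematic under stated assumptions rather than the pipeline of any cited work: `locate` and `segment` are hypothetical stand-ins for the two networks (simple thresholds here), and the helper names `bounding_box`, `crop`, `paste`, and `cascade_segment` are introduced only for illustration.

```python
def bounding_box(mask, margin=0):
    """Return (top, left, bottom, right), bottom/right exclusive, around nonzero pixels."""
    h, w = len(mask), len(mask[0])
    rows = [r for r in range(h) if any(mask[r])]
    cols = [c for c in range(w) if any(row[c] for row in mask)]
    if not rows:
        return None  # stage 1 found nothing
    return (max(rows[0] - margin, 0), max(cols[0] - margin, 0),
            min(rows[-1] + 1 + margin, h), min(cols[-1] + 1 + margin, w))


def crop(image, box):
    """Cut the ROI out of the full image."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def paste(shape, box, patch):
    """Place the ROI-level segmentation back into a full-size zero mask."""
    h, w = shape
    top, left, _, _ = box
    full = [[0] * w for _ in range(h)]
    for r, row in enumerate(patch):
        for c, v in enumerate(row):
            full[top + r][left + c] = v
    return full


def cascade_segment(image, locate, segment, margin=1):
    """Stage 1 localises the ROI; stage 2 segments only inside it."""
    box = bounding_box(locate(image), margin)
    if box is None:
        return [[0] * len(image[0]) for _ in image]
    return paste((len(image), len(image[0])), box, segment(crop(image, box)))


# Hypothetical stand-ins for the two networks: plain intensity thresholds.
def locate(img):
    return [[1 if v > 0.5 else 0 for v in row] for row in img]


def segment(img):
    return [[1 if v > 0.8 else 0 for v in row] for row in img]


image = [[0.0, 0.0, 0.0, 0.0, 0.0],
         [0.0, 0.6, 0.9, 0.0, 0.0],
         [0.0, 0.9, 0.9, 0.0, 0.0],
         [0.0, 0.0, 0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0, 0.0, 0.0]]

result = cascade_segment(image, locate, segment)
for row in result:
    print(row)
```

Restricting the second stage to the cropped ROI reduces the background the segmentation model has to handle, which is the motivation usually given for cascaded designs; the margin keeps some context around the coarse localisation in case stage 1 is slightly too tight.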

... Table 2. Summary of segmentation networks based on CMRI images

| Method | Year | Framework | Dataset | Dice LV | Dice RV | Dice Myo | | HD LV (mm) | HD RV (mm) | HD Myo (mm) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Active contour models[87] | 2019 | TensorFlow | ACDC | 0.986 | 0.940 | 0.969 | | 4.73 | 5.95 | 5.42 |
| MSU-Net[42] | 2019 | TensorFlow | ACDC | 0.897 | 0.855 | 0.836 | | - | - | - |
| 3D high resolution[92] | 2019 | TensorFlow | 1912 clinical cases | 0.8792 | - | - | | 3.99 | - | |
| Dynamic pixel-wise weighting-FCN[52] | 2020 | TensorFlow | MICCAI 2013 | - | - | 0.803 | | - | - | - |
| FCN for left ventricle segmentation[128] | 2020 | - | MICCAI 2009, 33 clinical cases | 0.95 | - | 0.914 | | - | - | - |
| CNN incorporating domain-specific constraints[38] | 2020 | TensorFlow | ACDC | 0.959 | 0.924 | 0.873 | | - | - | - |
| Combined CNN and U-net[50] | 2020 | PyTorch | MICCAI 2009 | 0.951 | - | - | | 3.641 | - | - |
| Automatic segmentation and quantification[46] | 2020 | PyTorch | ACDC, clinical data | 0.96 | - | 0.88 | | 6.31 | - | 7.11 |
| Fully automatic segmentation of RV and LV[88] | 2020 | PyTorch | ACDC, 5570 clinical cases | 0.927 | 0.873 | - | | - | - | - |